Introduction

In G-SYNC 101: Input Lag & Optimal Settings, Blur Busters showed you how the responsiveness of all games is affected by frame rate, display refresh rate, display lag and the various vertical synchronization technologies.

But when you play an online multiplayer game, your experience depends on a few more network-related factors, which I want to tell you more about in this article…

The Internet Is Slow

When an Internet service provider tries to sell you a “high speed internet” plan, it is only talking about bandwidth, where “faster” means that your downloads and uploads will take less time to finish.

When I say that the internet is slow, I am talking about the time that a data packet needs to travel through the copper and fibre optic cables to reach its destination.

The bandwidth does not affect the speed of a data packet. It will not travel any faster when you upgrade your plan from 10Mbps to 100Mbps. What does affect the actual speed of the data packet is the “velocity factor” of the copper and fibre optic cables that are used between your PC or console and the server that you connect to.

The velocity factor (VF%) describes the speed at which a signal propagates along the cable, expressed as a percentage of the speed of light in a vacuum. A category 5e twisted pair network cable has a velocity factor of 64, an optical fibre of around 67.
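To get a feeling for what these numbers mean, here is a minimal sketch that turns a velocity factor into a one-way travel time. The 1,000km cable length is a made-up example value, and the function name is just something I picked for illustration; real routes also add delay at every hop, which this ignores.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in a vacuum


def one_way_delay_ms(distance_km: float, velocity_factor_percent: float) -> float:
    """Time a signal needs to travel distance_km of cable, in milliseconds."""
    signal_speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S * velocity_factor_percent / 100
    return distance_km / signal_speed_km_per_s * 1000


# Hypothetical 1,000 km cable run, using the velocity factors quoted above.
for name, vf in [("Cat 5e copper", 64), ("optical fibre", 67)]:
    print(f"{name}: {one_way_delay_ms(1000, vf):.2f} ms one way")
```

Under these assumptions, both cable types need roughly 5ms per 1,000km in one direction, no matter how much bandwidth your plan has.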

So this means that developers of online multiplayer games face the issue that the bigger the distance between the players, the longer it will take data to travel between them, which gamers refer to as “lag.”

Measuring the Network Delay

The “ping” is a term that most gamers will have heard about. But how does it work exactly and what does it mean when you have a ping of 20ms?

When you “ping” another networked device, like a game server, then your device sends an “ICMP echo request” to that server, which then sends an “ICMP echo reply” back to your device.

When you measure the time between sending the request and receiving the answer, you get the ping, or round trip time, of the data.

So the ping tells us how long data has to travel through copper and fibre optic cables to reach the other device, and the longer it takes the data to get to its destination, the greater the difference between what you see on your monitor and what the other players see on theirs, which is what we call “lag.”

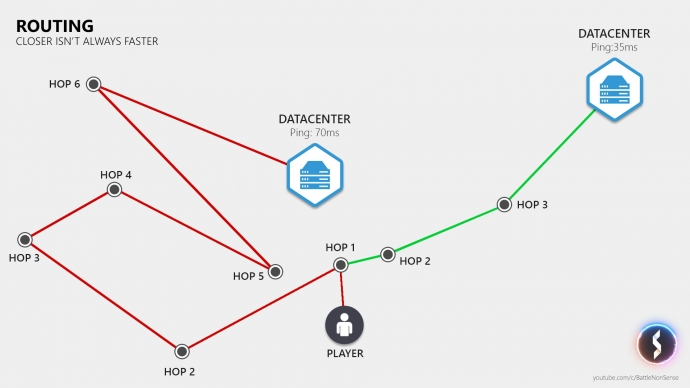
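The measuring principle can be sketched in a few lines. Sending real ICMP packets usually requires raw sockets and elevated privileges, so this example swaps in a small UDP echo server on the local machine as a stand-in; the function names are my own, and the numbers you get on loopback will be far lower than any ping to a real game server.

```python
import socket
import threading
import time


def start_echo_server() -> tuple[str, int]:
    """Start a UDP echo server on loopback and return its (host, port)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port

    def echo_forever() -> None:
        while True:
            data, sender = server.recvfrom(1024)
            server.sendto(data, sender)  # stand-in for the "echo reply"

    threading.Thread(target=echo_forever, daemon=True).start()
    return server.getsockname()


def measure_rtt_ms(address: tuple[str, int], timeout_s: float = 1.0) -> float:
    """Send one request and time how long the reply takes to come back."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        client.settimeout(timeout_s)
        sent_at = time.perf_counter()
        client.sendto(b"echo request", address)
        client.recvfrom(1024)  # blocks until the reply arrives, raises on timeout
        return (time.perf_counter() - sent_at) * 1000


if __name__ == "__main__":
    address = start_echo_server()
    for _ in range(3):
        print(f"round trip: {measure_rtt_ms(address):.3f} ms")
```

The important part is that the clock starts before the request is sent and stops only when the reply is back, so the result always covers both directions.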

Routing

The distance between networked devices affects how long data must travel between them. However, it is possible that your ping to a datacenter 300km away is higher than to a datacenter that is 400km away.

The reason behind this is that the copper and fibre optic cables do not take a direct route, and so the length of the line could be longer to a datacenter that is much closer to you.

Another factor is the number of stops (or hops) that your data packet must make on its way. With every additional hop, there is a risk that your latency increases or that you lose a data packet when one of these hops is maxed out.

There are tools like WTFast, which try to provide you with “faster” connections that have fewer hops and take a more direct route. However, the effectiveness of these tools depends on your location and your Internet service provider. If you already have very fast/short routes available to you, then these tools will not only be ineffective, but they might even increase your latency. So before you sign up for a paid subscription, you should test whether that tool can actually decrease the latency of your connection.

Packet Loss – Where did My Data Go?

The internet is not just slow in terms of how long a single bit must travel to reach its destination; there is also no real guarantee that a data packet will reach its destination at all.

When a packet disappears, then this is called “packet loss,” and it’s obviously bad when this happens in a realtime application such as an online game, where re-sending that data causes even more delay.

So what causes packet loss?

The issue could already be caused inside your PC or your console by a faulty network interface or broken drivers. Depending on whether you use WiFi or a powerline, interference or congestion can cause packet loss as well, as can a broken network port or network cable.

Packet loss can also be caused by your router when it has a firmware issue, a hardware problem, or when you are running out of upstream or downstream bandwidth, which can cause packets to get dropped.

What might help here is a firmware upgrade or a simple power cycle of the router. If your issues are caused by someone else maxing out your entire upstream or downstream bandwidth, you might want to invest in a router which prioritizes data from real time applications, like the EdgeRouter X from Ubiquiti.

If the packet loss happens outside of your home network, then you can only try and contact the support of your Internet service provider and see if they can help.

It Doesn’t Get Faster Than This

So the length of the route that connects the networked devices (like clients to the server), and the number of hops between them, affect how long it takes data to reach its destination.

This means that the lag that we experience in a game can never be shorter than the data travel time. So when you have a ping of 20ms to a game server, it takes your data packets roughly 10ms to reach the server, since the ping is the round trip time of your data.

The reason why the network delay in any online multiplayer game will never be as low as the round trip time is that there are a few more factors that add an additional delay on top of the travel time of the game data.

Update Rates

What adds an extra delay on top of the travel time of our data is how frequently a game sends and receives it. This means that when a game sends and receives 30 updates per second, then there is more time between updates than when it sends and receives 60 updates per second.

So by increasing the update rates, you can decrease the additional delay that is added on top of the travel time of your data:

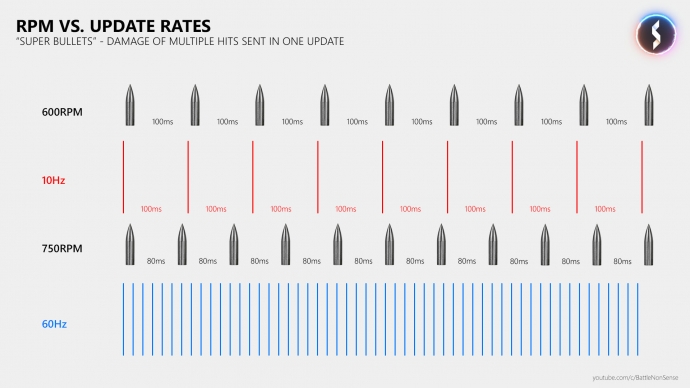
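Here is a rough sketch of that arithmetic. It assumes a deliberately simplified model of my own: the one-way travel time is half the ping, and in the worst case something happens right after an update was sent, so it has to wait one full update interval before it goes out. Real games have more moving parts than this.

```python
def update_interval_ms(updates_per_second: float) -> float:
    """Time between two consecutive updates, in milliseconds."""
    return 1000 / updates_per_second


def network_delay_range_ms(ping_ms: float, updates_per_second: float) -> tuple[float, float]:
    """Best and worst case one-way delay under the simplified model above."""
    one_way_ms = ping_ms / 2  # ping is the round trip time
    return one_way_ms, one_way_ms + update_interval_ms(updates_per_second)


for rate in (10, 30, 60):
    best, worst = network_delay_range_ms(ping_ms=20, updates_per_second=rate)
    print(f"{rate:>2}Hz: {update_interval_ms(rate):6.2f} ms between updates, "
          f"one-way delay between {best:.2f} and {worst:.2f} ms")
```

With a 20ms ping, going from 10Hz to 60Hz shrinks the worst case in this model from 110ms to under 27ms, even though the travel time itself has not changed.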

But low update rates do not only affect the network delay, they can even cause issues like “super bullets,” where a player takes too much damage from what appeared to be a single hit.

Let me explain why this happens…

Let’s say that the gameserver sends just 10 updates per second, like many Call of Duty games do when a client is hosting the match. At this update rate, we have 100ms between the data packets, which is the same time that we have between 2 bullets when a gun has a firing rate of 600 rounds per minute. So at an update rate of 10Hz, we have one data packet per fired bullet, as long as there is no packet loss and as long as the gun has a firing rate of no more than 600RPM.

But many shooters, including Call of Duty, have guns which shoot 750 rounds per minute, or even more. And so we then have 2 or more bullets in some updates. This means that when 2 bullets hit a player, the damage of these 2 hits will be sent in a single update, and so to the receiving player it looks as if he got hit by a “super bullet” that dealt more damage than a single hit from this gun is able to deal.
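You can check this with a small simulation. The sketch below assumes that every shot hits, that the first shot is fired exactly when an update window starts, and that each shot is carried by the update window it falls into; the function name is made up for this example. Exact fractions are used so that shots landing right on a window boundary are not misplaced by floating point rounding.

```python
from collections import Counter
from fractions import Fraction


def bullets_per_update(rounds_per_minute: int, update_rate_hz: int, bullets: int) -> list[int]:
    """Count how many consecutive shots end up in each update window.

    Only windows that contain at least one shot are listed.
    """
    shot_interval = Fraction(60, rounds_per_minute)  # seconds between shots
    update_interval = Fraction(1, update_rate_hz)    # seconds between updates
    counts: Counter[int] = Counter()
    for shot in range(bullets):
        fire_time = shot * shot_interval
        window = fire_time // update_interval  # index of the update carrying this shot
        counts[window] += 1
    return [counts[window] for window in sorted(counts)]


print("600RPM @ 10Hz:", bullets_per_update(600, 10, 6))
print("750RPM @ 10Hz:", bullets_per_update(750, 10, 8))
print("750RPM @ 60Hz:", bullets_per_update(750, 60, 8))
```

At 600RPM and 10Hz every update carries exactly one bullet. At 750RPM some updates carry two, which is the “super bullet”, and at 60Hz the same gun is back to one bullet per update.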

This should make clear why high update rates are not only required to keep the network delay short, but also to get a consistent online experience, as a little bit of packet loss is less of an issue at high update rates.

Tick Rate

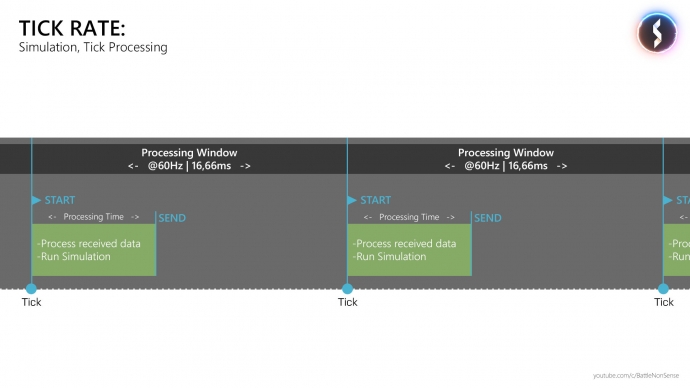

Now where is that data coming from that is sent by the game? This is where the term “tick” or “simulation rate” comes into play, which is how many times per second the game processes and produces data.

So when you have a tick or simulation rate of 60, then this will cause less delay than when you use a tickrate of 30, as a tickrate of 60 will allow the server to send 60 updates per second.

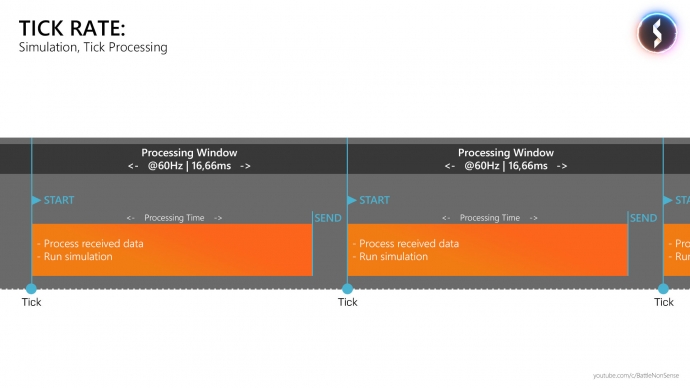

But it is not only the number of simulation steps per second that matters. It’s also critical that the server finishes a tick as fast as possible, because at a tickrate of 60Hz, it only has a processing window of about 16.67ms inside which it must finish a simulation step.

So at the beginning of a tick the server starts to process the data it received and runs its simulations. After that it sends the result to the clients and then sleeps until the next tick happens. The faster the server finishes a tick, the earlier the clients will receive new data from the server, which reduces the delays between players and makes the hit registration feel more responsive.

So when it comes to the server’s performance, it is then imperative that it finishes a simulation step as fast as possible, or at least inside the processing window that is given by the tickrate.

When it gets close to the limit, or even fails to process a tick inside that timeframe, then you will instantly notice this, as it results in all sorts of strange gameplay issues like rubber banding, players teleporting, hits getting rejected, physics failing, etc.

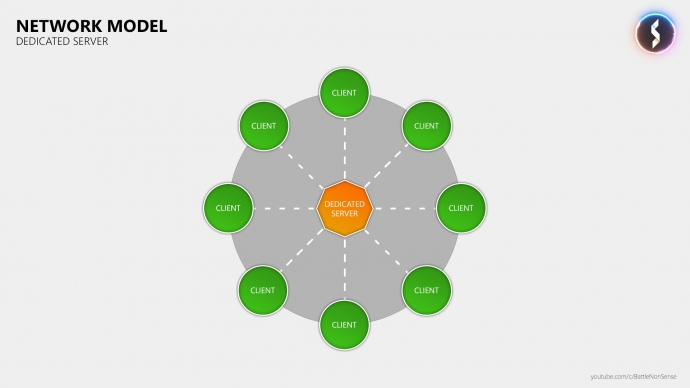
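The tick cycle described above (process, send, sleep until the next tick) can be sketched as a fixed-rate loop. This is only an illustration under my own assumptions: `simulate` is a placeholder for whatever work the game does per tick, and a real server would send its results to the clients where the comment indicates.

```python
import time
from typing import Callable


def tick_budget_ms(tick_rate_hz: int) -> float:
    """Processing window available for a single tick, in milliseconds."""
    return 1000 / tick_rate_hz


def run_ticks(tick_rate_hz: int, num_ticks: int, simulate: Callable[[int], None]) -> list[float]:
    """Run a fixed-rate loop and return how long each tick's work took (ms)."""
    budget_s = 1 / tick_rate_hz
    durations_ms = []
    next_tick = time.perf_counter()
    for tick in range(num_ticks):
        started = time.perf_counter()
        simulate(tick)  # process received data and step the simulation
        # A real server would send the results to the clients here.
        durations_ms.append((time.perf_counter() - started) * 1000)

        next_tick += budget_s
        sleep_for = next_tick - time.perf_counter()
        if sleep_for > 0:
            time.sleep(sleep_for)  # finished early: sleep until the next tick
        else:
            print(f"tick {tick} missed its {tick_budget_ms(tick_rate_hz):.2f} ms window")
    return durations_ms


if __name__ == "__main__":
    work = run_ticks(60, 5, lambda tick: time.sleep(0.002))  # pretend 2 ms of work
    print([f"{ms:.2f}" for ms in work])
```

If `simulate` takes longer than the budget, the loop has no time left to sleep and the tick is late, which is the situation that shows up in game as rubber banding and rejected hits.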

Network Models – Dedicated Gameserver

Simply put, there are 3 different network models which developers can choose from for their multiplayer game. With the first one, they rent servers from companies like i3d, or use cloud services like Amazon’s AWS, Microsoft’s Azure, or Google’s Cloud Service to host dedicated game server instances to which the players then connect:

This means that the game server is running on powerful hardware, and the datacenter provides enough bandwidth to handle all players that connect to it.

Also, anti-cheat and the effect that players with high ping have on the hit registration are easier to handle in this network model.

The downside of dedicated servers is that if you don’t have a game that builds around the idea of the community running (paying for) these servers, then the publisher or game studio must pay for them, and that can get quite expensive.

Another challenge is that if you release your game worldwide, then you must also make sure that all players who bought the game have access to low latency servers. If you do not do that, then you force a lot of people to play at very high ping, and that is a problem for your entire community, not just the players who do not have servers near them.

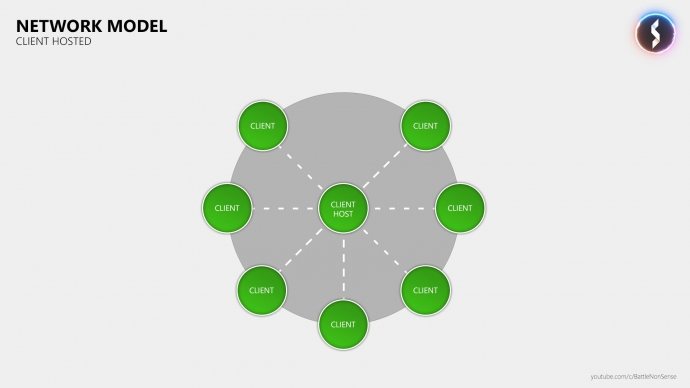

Network Models – Client Hosted (Listen Server)

A different approach, which many people falsely refer to as “Peer-to-Peer,” is that you use the PC or console of one of the players to host the game, which means that he also becomes the server:

With this model, the game studio does not have to pay for expensive dedicated game servers. This also allows players located in a remote region to play with their friends at a relatively low latency (if they all live nearby).

One of the many downsides of this network model is that the player who is also the server gets an advantage because he has 0 lag – this means that he will see you before you see him and he can fire at you before you fire at him.

Then we also have the problem that all players are connected to the host through his consumer grade internet connection, where in the worst case, he could even be using WiFi. This frequently results in a lot of lag, packet loss, rubber banding, and unreliable hit registration. It also limits the update rates of the game, as most residential internet connections can’t handle the stress of hosting a 10+ player match at 60Hz.

But the most frustrating part of such client hosted matches is the host migration, which is the process where the whole game pauses for several seconds while a different player is elected to replace the host that just left.

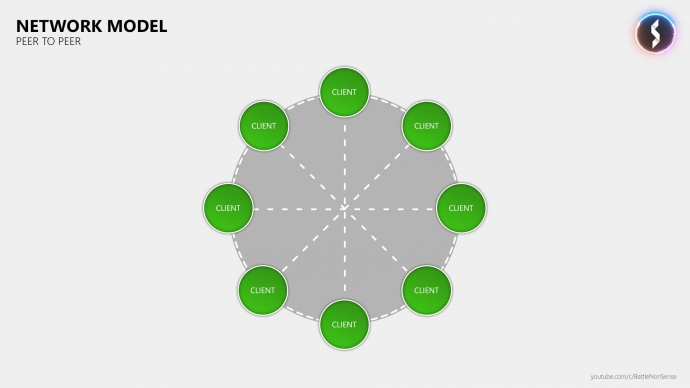

Network Models – Peer-to-Peer

The Peer-to-Peer network model is mostly seen in 1v1 fighting and sports games, but there are also a few other multiplayer games like Destiny and For Honor which use Peer-to-Peer for matches with more than 2 players:

Now while the implementations of the dedicated server and client hosted (listen server) network models don’t differ much between games, the same cannot be said about Peer-to-Peer, as there are many different variations. Destiny 1 and 2, for example, even run some of the simulations on dedicated servers.

But one thing that these Peer-to-Peer variations have in common is that all clients directly communicate with each other.

As a result, you can see the WAN IP addresses of all the other players that you are playing with, which streamers especially do not like, as this increases the risk of getting DDoS’ed.

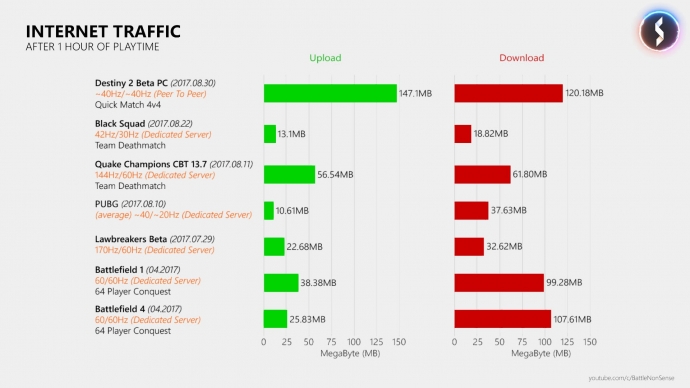

Another problem that we see in games with more than 2 players is that Peer-to-Peer massively increases the network traffic, which can become an issue for a player’s internet connection:
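To put rough numbers on that, here is a small sketch that compares the two models. It assumes a full mesh in which every client sends a separate packet to every other player for each update, which is my simplification; actual Peer-to-Peer implementations vary a lot between games.

```python
def connections_dedicated(players: int) -> int:
    """Every client talks only to the server."""
    return players


def connections_peer_to_peer(players: int) -> int:
    """Full mesh: every pair of clients shares one connection."""
    return players * (players - 1) // 2


def upstream_packets_per_second(players: int, update_rate_hz: int, peer_to_peer: bool) -> int:
    """Packets a single client must send per second."""
    targets = players - 1 if peer_to_peer else 1  # all other peers vs. just the server
    return targets * update_rate_hz


for players in (2, 6, 12):
    print(f"{players:>2} players: "
          f"{connections_dedicated(players):>2} vs {connections_peer_to_peer(players):>2} connections, "
          f"{upstream_packets_per_second(players, 60, False)} vs "
          f"{upstream_packets_per_second(players, 60, True)} packets/s upstream per client at 60Hz")
```

In a 1v1 match there is no difference in what a client has to send, but with 12 players each client in this model sends 11 times as many packets as it would to a dedicated server.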

Compared to dedicated gameservers, the developer’s ability to secure the game (anti-cheat) and the handling of players with a high ping is also limited in the Peer-to-Peer network model.

So in 1v1 fighting or sports games, Peer-to-Peer (GGPO / lockstep) is still the way to go because a dedicated game server would just increase the delay, and the client hosted (listen server) network model would give one of the two players an unfair advantage.

But when it comes to any other kinds of fast-paced online multiplayer games with more than 2 players, then only the dedicated gameserver network model is able to provide the bandwidth and processing power required for a high tick rate and high update rates.

When a publisher or developer chooses the client hosted (listen server) or Peer-to-Peer network model over dedicated gameservers for his 5+ player multiplayer game, then he does that to minimize costs, not because it offers a better online experience.

Everyone Lags – Lag Compensation

So every player has a certain amount of latency, which is the result of distance, the number of hops, the tick rate, and update rates. If the game did not compensate for at least a portion of the player’s latency, then hitting other players would already be a problem at a ping of 50ms.

An issue that nearly all first-person shooters are suffering from is that they compensate for so much latency that players with a very low ping receive shots far behind cover. This leads to a bad experience for players who have a stable and low latency connection to the server – Rainbow Six: Siege players surely know what I am talking about here.

DICE went for an interesting solution in Battlefield 1. There the game server will only compensate for the player’s latency up to a certain threshold (which can be set by the developers). Once the player’s ping/latency exceeds this threshold, he must start to lead his shots to compensate for the additional latency. This mitigates the issue where players with a low latency connection receive hits very far behind cover.
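The idea behind such a threshold can be sketched like this. To be clear, this is not DICE’s actual implementation: the function name and the 100ms threshold are made up for illustration, and it only shows how a latency value would be split into the part the server makes up for and the part the player has to lead.

```python
def split_latency(latency_ms: float, threshold_ms: float) -> tuple[float, float]:
    """Return (latency compensated by the server, latency the player must lead)."""
    compensated_ms = min(latency_ms, threshold_ms)
    must_lead_ms = max(0.0, latency_ms - threshold_ms)
    return compensated_ms, must_lead_ms


THRESHOLD_MS = 100  # hypothetical value chosen by the developers

for latency in (30, 100, 180):
    compensated, lead = split_latency(latency, THRESHOLD_MS)
    print(f"{latency:>3} ms latency: server compensates {compensated:.0f} ms, "
          f"player leads shots by {lead:.0f} ms")
```

With this split, a player at 30ms notices nothing, while a player at 180ms has to aim ahead by the 80ms the server no longer covers, so his latency stops being the low ping player’s problem.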

The following video shows this mechanic in action:

One thing that I want to stress again is that publishers and studios must put more effort into server coverage. The reason why some games have many players with very high ping is down to the fact that the publisher/studio does not provide servers in every region they sell their game in, which means that many players simply don’t have access to servers with less than a 150ms ping. And that’s unacceptable, at least in my opinion.

Now How About Netcode?

Netcode is a layman’s term used by gamers and developers alike when they discuss topics related to the networking of a game. Basically, everything in this article falls under “netcode.”

So congratulations! You now have a basic understanding of how the networking is done in online multiplayer games and what affects the delay that you experience in these games. With this knowledge, you can now provide developers with good feedback about network related issues in their games, and you are able to counter the argument that “30Hz is good enough.”

If you want to know how good or bad the “netcode” of a specific multiplayer game is, then you might be interested in my YouTube channel where I regularly release netcode analysis videos for new multiplayer games: www.youtube.com/c/battlenonsense.

I’d also like to thank Blur Busters for the opportunity to write this article, and I want to thank you for your attention and interest in this subject!

Have a great day!

-Chris Battle(non)sense