“Let me get this straight…”

“Closing FAQ” published Jan 25, 2019

G-SYNC 101 has been around long enough now to have accumulated some frequently asked follow-up questions from readers, both in the comments sections here and in the Blur Busters Forums.

To avoid further confusion or repeat questions, I have compiled a selection of them below for easier access. This section may change and grow as time goes by, so check back here regularly for updates before asking your question.

Do your “Optimal G-SYNC Settings” work for “G-SYNC Compatible” FreeSync monitors?

(LAST UPDATED 12/07/2023: adds support for 7 newly validated displays) Yes, on officially supported “G-SYNC Compatible” FreeSync monitors (see Nvidia’s G-SYNC Monitors list), my recommendation to limit the FPS (a safe -3 minimum) below the max refresh rate, and to use G-SYNC + V-SYNC “On” to eliminate tearing 100% of the time, still applies.

Below is a list of links relating to “G-SYNC Compatible” functionality. This FAQ entry will continue to be updated whenever new information becomes available.

“G-SYNC Compatible” FreeSync Monitor Resources

Official:

- Nvidia: “Support for [32] Newly Validated G-SYNC Compatible Displays”

- Nvidia: “Support for Newly Validated G-SYNC Compatible Displays” ASUS PG49WCD, ASUS PG34WCDM, ASUS VG27AQM1A, Corsair 315QHD165, LG 27GR95QE, LG 27GR95UM, Philips 27M1N5500ZR

- Nvidia: “Support For Newly Validated G-SYNC Compatible Displays” AOC U27G3X, AOC AG275QA1, I-O DATA GDQ271JA, LG 27GR93U, LG 27GR750Q, LG 38WR850C-W

- Nvidia: “Support For Newly Validated G-SYNC Compatible Displays” AOC AG275QZ, AOC AG275QXP, AOC AG275QXPD, ASUS VG27AQL3A, ASUS VG279QM1A, Philips TV 42OLED808, Samsung Odyssey G93SC, Samsung Odyssey G95SC

- Nvidia: “Support for Newly Validated G-SYNC Compatible Displays” ASUS ROG XG259CM, Galaxy VI-01, Samsung G95NA

- Nvidia: “Support for Newly Validated G-SYNC Compatible Displays” Acer XB283KV, ASUS VG27AC1A, AOC AG274QS8R1B, I-O DATA GC241UXD, MSI G274, MSI MAG281URF, Philips 32M1N5800, Philips PHL276M1RPE, Philips PHL32M1N5500Z, Philips PHL32M1N5800A, ViewSonic VP2776

- Nvidia: “Support for Newly Validated G-SYNC Compatible Displays” AOC AG274FG8R4+, AOC AG274QG3R4B+, ASUS VG258QM, ASUS PG32UQ, Lenovo G24-20, MSI G251F, ViewSonic XG320Q

- Nvidia: “Support for Newly Validated G-SYNC Compatible Displays” AOC 24G2W1G8, AOC AG274US4R6B, ASUS XG349C, Philips 279M1RV, Samsung LC27G50A

- Nvidia: “Support for Newly Validated G-SYNC Compatible Displays” LG 27GP950, LG 2021 B1 4K Series, LG 2021 C1 4K Series, LG 2021 G1 4K Series, LG 2021 Z1 8K Series, MSI MAG301RF

- Nvidia: “Support for Newly Validated G-SYNC Compatible Displays” Acer XV253Q GW, ASUS VG27AQ1A, LG 27GN800, LG 27GN600, ViewSonic XG270Q

- Nvidia: “GeForce Hotfix Driver Version 451.85,” “[G-Sync Compatible] Adds support for the Samsung 27″ Odyssey G7 gaming monitor”

- Nvidia: “Support for Newly Validated G-SYNC Compatible Displays” Dell S2721HGF, Dell S2721DGF, Lenovo G25-10

- Nvidia: “Support For Newly Validated G-SYNC Compatible Displays” AOC AG273F1G8R3, ASUS VG27AQL1A, Dell S2421HGF, Lenovo G24-10, LG 27GN950, LG 32GN50T/32GN500, Samsung 2020 Odyssey G9, Samsung 2020 Odyssey G7 (27″), Samsung 2020 Odyssey G7 (32″)

- Nvidia: “Support For Newly Validated G-SYNC Compatible Displays” Acer XB273GP, Acer XB323U, and ASUS VG27B

- Nvidia: “Support For Newly Validated G-SYNC Compatible Displays” AOC AG271FZ2, AOC AG271F1G2, and ASUS PG43U

- Nvidia: “Support For Newly Validated G-SYNC Compatible Displays” ASUS VG259QM, Dell AW2521HF, and LG 34GN850

- Nvidia: “[…] Over Two-Dozen New G-SYNC Compatible Gaming Monitors Supported”

- Nvidia: “Support For Newly Validated G-SYNC Compatible Displays” MSI MAG251RX and ViewSonic XG270

- Nvidia: “Support For Newly Validated G-SYNC Compatible Displays” ACER XB273U, ACER XV273U and ASUS VG259Q

- Nvidia: “[…] Support For 7 New G-SYNC Compatible Gaming Monitors” Acer CG437K P, Acer VG272U P, Acer VG272X, AOC 27G2G4, ASUS XG279Q, Dell AW2720HF, and Lenovo Y27Q-20

- Nvidia: 6 newly-validated G-SYNC Compatible monitors “ACER VG252Q, ACER XV273 X, GIGABYTE AORUS FI27Q, GIGABYTE FI27Q-P, LG 27GL650, and LG 27GL63T”

- Nvidia: “New G-SYNC Compatible Displays” LG 34GL750, HP 25mx, and HP Omen X 25f

- Nvidia: “[…] Includes Support For G-SYNC Compatible Monitors” Dell S2419HGF, HP X25, and LG 27GL850

- Nvidia: “G-SYNC Compatible Testing, Phase 1 Complete: Only 5% of Adaptive-Sync Monitors Made The Cut”

- Nvidia: “[7] More Gaming Monitors Get G-SYNC Compatible Validation”

- Nvidia: Adds support for “G-SYNC Compatible Monitors [..]” ASUS VG278QR and ASUS VG258

- Nvidia: “[..] Adds Support For New G-SYNC Compatible Gaming Monitors” Acer ED273 A, Acer XF250Q, and BenQ XL2540-B / ZOWIE XL LCD

- Nvidia: Initial “G-SYNC Compatible” for FreeSync monitors announcement

- Nvidia: Official “G-SYNC and G-SYNC Compatible Gaming Monitors” list

- Nvidia: “What are the system requirements for G-sync Compatible display technology?”

- Nvidia: “Do G-SYNC Compatible displays work on both DisplayPort and HDMI with GeForce GPUs?”

- Nvidia: “I own an Adaptive-Sync monitor that is not on your G-SYNC Compatible list. Can I enable variable refresh rate anyways?”

- Nvidia: “Do NVIDIA GeForce display drivers support frame doubling on G-SYNC Compatible displays?”

Third Party:

- MSI Tested: “MSI’s monitors are now NVIDIA G-Sync Compatible!”

- Battle(non)sense “FreeSync vs. G-Sync Compatible” input lag tests

- Digital Foundry’s “Best FreeSync and ‘G-Sync compatible’ monitors [guide] for Nvidia graphics cards”

- Unofficial “G-SYNC Compatible” monitor list

- Unofficial “G-SYNC Compatible” monitor thread (reddit)

Wait, why should I enable V-SYNC with G-SYNC again? And why am I still seeing tearing with G-SYNC enabled and V-SYNC disabled? Isn’t G-SYNC supposed to fix that?

(LAST UPDATED: 05/02/2019) The answer is frametime variances.

“Frametime” denotes how long a single frame takes to render. “Framerate” is the number of frames rendered within a one-second period, and so reflects the average of those frames’ render times.

At 144Hz, a single frame takes 6.9ms to display (the number of which depends on the max refresh rate of the display, see here), so if the framerate is 144 per second, then the average frametime of 144 FPS is 6.9ms per frame.

In reality, however, frametime varies from frame to frame, so just because an average framerate of 144 per second has an average frametime of 6.9ms per frame, doesn’t mean all 144 of those frames in each second take exactly 6.9ms each; one frame could render in 10ms, the next could render in 6ms, but by the end of each second, enough will land near the 6.9ms render target to average 144 FPS.
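To make the arithmetic above concrete, here is a minimal Python sketch of the two conversions. The function names and the sample frametimes are my own illustrative assumptions, not measurements from any test in this article.

```python
from typing import Sequence


def frametime_ms(rate: float) -> float:
    """Milliseconds per frame at a given framerate (or per refresh cycle at a given Hz)."""
    return 1000.0 / rate


def average_fps(frametimes_ms: Sequence[float]) -> float:
    """Average framerate implied by a run of individual frametimes."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


# Hypothetical frametimes: individual frames range from 4.8ms to 10ms,
# yet the run as a whole still averages out to roughly 144 FPS.
sample = [10.0, 6.0, 4.8, 7.0]

print(f"144Hz refresh cycle: {frametime_ms(144):.2f} ms")
print(f"Average framerate of sample: {average_fps(sample):.1f} FPS")
```

The point of the sketch is simply that an average framerate says nothing about how evenly the individual frames were delivered.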

So what happens when just one of those 144 frames renders in, say, 6.8ms (146 FPS average) instead of 6.9ms (144 FPS average) at 144Hz? The affected frame becomes ready too early, and begins to scan itself into the current “scanout” cycle (the process that physically draws each frame, pixel by pixel, left to right, top to bottom on-screen) before the previous frame has a chance to fully display (a.k.a. tearing).

G-SYNC + V-SYNC “Off” allows these instances to occur, even within the G-SYNC range, whereas G-SYNC + V-SYNC “On” (what I call “frametime compensation” in this article) allows the module (with average framerates within the G-SYNC range) to time delivery of the affected frames to the start of the next scanout cycle, which lets the previous frame finish in the existing cycle, and thus prevents tearing in all instances.

And since G-SYNC + V-SYNC “On” only holds onto the affected frames for whatever time it takes the previous frame to complete its display, virtually no input lag is added; the only input lag advantage G-SYNC + V-SYNC “Off” has over G-SYNC + V-SYNC “On” is literally the tearing seen, nothing more.
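The difference between the two settings can be sketched with a toy Python model. This is only an illustration under simplifying assumptions (each frame is judged solely against the scanout time at max refresh, and `classify_frames` is a hypothetical name); it is not how the G-SYNC module is actually implemented.

```python
from typing import List, Sequence, Tuple


def classify_frames(
    frametimes_ms: Sequence[float], max_refresh_hz: float, vsync_on: bool
) -> List[Tuple[str, float]]:
    """Label each frame and report how long it is held (ms) before delivery."""
    scanout_ms = 1000.0 / max_refresh_hz  # time needed to draw one full frame
    results = []
    for ft in frametimes_ms:
        if ft >= scanout_ms:
            # Previous frame already finished displaying: nothing to compensate.
            results.append(("in sync", 0.0))
        elif vsync_on:
            # Frame is early: hold it only until the current scanout completes.
            results.append(("held", scanout_ms - ft))
        else:
            # Frame is early and nothing holds it: it scans into the current cycle.
            results.append(("tear", 0.0))
    return results


# One early frame (6.8ms) followed by one in-range frame (7.5ms) at 144Hz.
for setting in (False, True):
    print("V-SYNC On " if setting else "V-SYNC Off", classify_frames([6.8, 7.5], 144, setting))
```

In this model, the early 6.8ms frame is held for only a fraction of a millisecond with V-SYNC “On,” which is why the added input lag is negligible.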

For further explanations on this subject see part 1 “Control Panel,” part 4 “Range,” and part 6 “G-SYNC vs. V-SYNC OFF w/FPS Limit” of this article, or read the excerpts below…

In part 1 “Control Panel“:

Upon its release, G-SYNC’s ability to fall back on fixed refresh rate V-SYNC behavior when exceeding the maximum refresh rate of the display was built-in and non-optional. A 2015 driver update later exposed the option.

This update led to recurring confusion, creating a misconception that G-SYNC and V-SYNC are entirely separate options. However, with G-SYNC enabled, the “Vertical sync” option in the control panel no longer acts as V-SYNC, and actually dictates whether, one, the G-SYNC module compensates for frametime variances output by the system (which prevents tearing at all times. G-SYNC + V-SYNC “Off” disables this behavior; see G-SYNC 101: Range), and two, whether G-SYNC falls back on fixed refresh rate V-SYNC behavior; if V-SYNC is “On,” G-SYNC will revert to V-SYNC behavior above its range, if V-SYNC is “Off,” G-SYNC will disable above its range, and tearing will begin display wide.

Within its range, G-SYNC is the only syncing method active, no matter the V-SYNC “On” or “Off” setting.

In part 4 “Range“:

G-SYNC + V-SYNC “Off”:

The tearing inside the G-SYNC range with V-SYNC “Off” is caused by sudden frametime variances output by the system, which will vary in severity and frequency depending on both the efficiency of the given game engine, and the system’s ability (or inability) to deliver consistent frametimes.

G-SYNC + V-SYNC “Off” disables the G-SYNC module’s ability to compensate for sudden frametime variances, meaning, instead of aligning the next frame scan to the next scanout (the process that physically draws each frame, pixel by pixel, left to right, top to bottom on-screen), G-SYNC + V-SYNC “Off” will opt to start the next frame scan in the current scanout instead. This results in simultaneous delivery of more than one frame in a single scanout (tearing).

In the Upper FPS range, tearing will be limited to the bottom of the display. In the Lower FPS range (<36) where frametime spikes can occur (see What are Frametime Spikes?), full tearing will begin.

Without frametime compensation, G-SYNC functionality with V-SYNC “Off” is effectively “Adaptive G-SYNC,” and should be avoided for a tear-free experience (see G-SYNC 101: Optimal Settings & Conclusion).

And:

G-SYNC + V-SYNC “On”:

This is how G-SYNC was originally intended to function. Unlike G-SYNC + V-SYNC “Off,” G-SYNC + V-SYNC “On” allows the G-SYNC module to compensate for sudden frametime variances by adhering to the scanout, which ensures the affected frame scan will complete in the current scanout before the next frame scan and scanout begin. This eliminates tearing within the G-SYNC range, in spite of the frametime variances encountered.

Frametime compensation with V-SYNC “On” is performed during the vertical blanking interval (the span between the previous and next frame scan), and, as such, does not delay single frame delivery within the G-SYNC range and is recommended for a tear-free experience (see G-SYNC 101: Optimal Settings & Conclusion).

Finally, in part 6 “G-SYNC vs. V-SYNC OFF w/FPS Limit“:

As noted in G-SYNC 101: Range, G-SYNC + V-SYNC “Off” (a.k.a. Adaptive G-SYNC) can have a slight input lag reduction over G-SYNC + V-SYNC as well, since it will opt for tearing instead of aligning the next frame scan to the next scanout when sudden frametime variances occur.

To eliminate tearing, G-SYNC + V-SYNC is limited to completing a single frame scan per scanout, and it must follow the scanout from top to bottom, without exception. On paper, this can give the impression that G-SYNC + V-SYNC has an increase in latency over the other two methods. However, the delivery of a single, complete frame with G-SYNC + V-SYNC is actually the lowest possible, or neutral speed, and the advantage seen with V-SYNC OFF is the negative reduction in delivery speed, due to its ability to defeat the scanout.

Bottom-line, within its range, G-SYNC + V-SYNC delivers single, tear-free frames to the display the fastest the scanout allows; any faster, and tearing would be introduced.

I still don’t get it, then why do I need an FPS limit with G-SYNC, and why does the limit have to be below the refresh rate? Why not “at” it?

(LAST UPDATED: 05/02/2019) G-SYNC adjusts the refresh rate to the framerate. If the framerate reaches or exceeds the max refresh rate at any point, G-SYNC no longer has anything to adjust, at which point it reverts to V-SYNC behavior (G-SYNC + V-SYNC “On”) or screen-wide tearing (G-SYNC + V-SYNC “Off”).

As for why a minimum of 2 FPS (and a recommendation of at least 3 FPS) below the max refresh rate is required to stay within the G-SYNC range, it’s because frametime variances output by the system can cause FPS limiters (both in-game and external) to occasionally “overshoot” the set limit (the same reason tearing is caused in the upper FPS range with G-SYNC + V-SYNC “Off”), which is why an “at” max refresh rate FPS limit (see part 5 “G-SYNC Ceiling vs. FPS Limit” for input lag test numbers) typically isn’t sufficient in keeping the framerate within the G-SYNC range at all times.
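As a rough illustration of why “at” the refresh rate leaves no margin, the Python sketch below compares the frametime headroom of a 144 FPS limit and a 141 FPS limit on a 144Hz display. The 0.1ms overshoot figure and all function names are hypothetical, chosen only to show the principle; real limiter overshoot varies per system and per game.

```python
def recommended_fps_limit(max_refresh_hz: int, margin_fps: int = 3) -> int:
    """The -3 FPS rule of thumb described above."""
    return max_refresh_hz - margin_fps


def headroom_ms(fps_limit: float, max_refresh_hz: float) -> float:
    """How much sooner than its target a frame can arrive before it hits the ceiling."""
    return 1000.0 / fps_limit - 1000.0 / max_refresh_hz


def stays_in_range(fps_limit: float, max_refresh_hz: float, overshoot_ms: float) -> bool:
    """True if a frame delivered `overshoot_ms` early is still slower than the max refresh."""
    return (1000.0 / fps_limit - overshoot_ms) > 1000.0 / max_refresh_hz


refresh = 144
overshoot = 0.1  # hypothetical limiter overshoot, in ms

for limit in (refresh, recommended_fps_limit(refresh)):
    print(
        f"limit {limit} FPS: headroom {headroom_ms(limit, refresh):.3f} ms, "
        f"stays in range: {stays_in_range(limit, refresh, overshoot)}"
    )
```

With the limit set “at” the refresh rate the headroom is zero, so any overshoot at all leaves the G-SYNC range, whereas the -3 limit absorbs small overshoots.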

Alright, I now understand why the V-SYNC option and a framerate limiter are recommended with G-SYNC enabled, but why use both?

(ADDED: 05/02/2019) Because, with G-SYNC enabled, each performs a role the other cannot:

- Enabling the V-SYNC option is recommended to 100% prevent tearing in both the very upper (frametime variances) and very lower (frametime spikes) G-SYNC range. However, unlike framerate limiters, enabling the V-SYNC option will not keep the framerate within the G-SYNC range at all times.

- Setting a minimum -3 FPS limit below the max refresh rate is recommended to keep the framerate within the G-SYNC range at all times, preventing double buffer V-SYNC behavior (and adjoining input lag) with G-SYNC + V-SYNC “On,” or screen-wide tearing (and complete disengagement of G-SYNC) with G-SYNC + V-SYNC “Off” whenever the framerate reaches or exceeds the max refresh rate. However, unlike the V-SYNC option, framerate limiters will not prevent tearing.
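The two roles can be summarized as a simple lookup, sketched here in Python. The function name and the wording of the returned labels are my own shorthand for the behaviors described in the two points above; this is a summary aid, not driver logic.

```python
def gsync_behavior(fps: float, max_refresh_hz: float, vsync_on: bool) -> str:
    """Summarize what the display does for a given framerate and V-SYNC option."""
    if fps >= max_refresh_hz:
        # Framerate has reached or exceeded the max refresh rate: outside the G-SYNC range.
        if vsync_on:
            return "V-SYNC behavior (added input lag)"
        return "screen-wide tearing (G-SYNC disengaged)"
    # Inside the G-SYNC range.
    if vsync_on:
        return "G-SYNC active, tear-free"
    return "G-SYNC active, tearing possible from frametime variances"


for fps in (141, 144):
    for vsync in (True, False):
        label = "On" if vsync else "Off"
        print(f"{fps} FPS @ 144Hz, V-SYNC {label}: {gsync_behavior(fps, 144, vsync)}")
```

Only one of the four combinations is both tear-free and free of V-SYNC input lag: a framerate held below the max refresh rate with the V-SYNC option “On.”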

Your “Optimal G-SYNC Settings” say I should only “Enable [G-SYNC] for full screen mode” in the NVCP, but what about for games that don’t offer an exclusive fullscreen option?

(LAST UPDATED: 10/09/2022) My Optimal G-SYNC Settings are just that: optimal.

Thus, while G-SYNC (and any game with any syncing solution, for that matter) typically performs best in exclusive fullscreen, it is true that not all games support this mode, so use of G-SYNC’s “Enable for windowed and fullscreen mode” is necessary for games that only offer a borderless or windowed option.

Do note, however, that G-SYNC’s “Enable for windowed and full screen mode” can apply to non-game apps as well, which will result in stutter and slowdown when affected app windows are dragged and/or focused on due to unintended VRR (variable refresh rate) behavior.

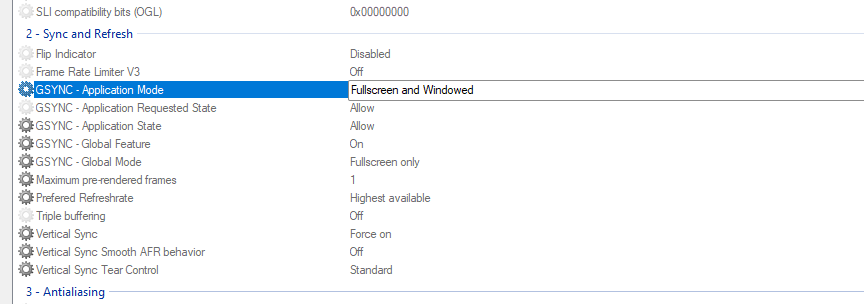

As such, it is recommended to keep G-SYNC set to “Enable for full screen mode” globally, and then to use Nvidia Profile Inspector (download here) to set “G-SYNC – Application Mode” to “Fullscreen and Windowed” per game profile, as needed:

What exactly does the “Maximum pre-rendered frames” setting do again? Doesn’t it affect input lag?

(LAST UPDATED: 10/29/2019) NOTE: As of Nvidia driver version 436.02, “Maximum pre-rendered frames” is now labeled “Low Latency Mode,” with “On” being equivalent to MPRF “1.”

While this setting was already covered in part 14 “Optimal G-SYNC Settings & Conclusion” under a section titled “Maximum Pre-rendered Frames: Depends,” let’s break it down again…

The pre-rendered frames queue is effectively a CPU-side throttle for average framerate, and the “Maximum pre-rendered frames” setting controls the queue size.

Higher values increase the maximum amount of pre-rendered frames able to generate at once, which, in turn, typically improves frametime performance and allows higher average framerates on weaker CPUs by giving them more time to prepare frames before handing them off to the GPU to be completed.

So while the “Maximum pre-rendered frames” setting does introduce more buffers at higher values, its original intended function is less about being a direct input lag modifier (as is commonly assumed), and more about allowing weaker systems to run demanding games more smoothly, and reach higher average framerates than they would otherwise be able to (if at all).

On a system where the power of the CPU and GPU are more matched, a “Maximum pre-rendered frames” value of “1” is typically recommended, and usually causes little to no negative effects, any of which would show up as a lower average framerate and/or increased frametime spikes.

Finally, it should be noted that the NVCP’s MPRF setting isn’t respected by every game, and even where it is, MPRF results may vary per system and/or per game, so user-experimentation is required.

Is the RTSS FPS limiter always active when set?

No.

The RTSS FPS limiter (or any FPS limiter for that matter, in-game or external) is only active when the framerate is sustained above the set FPS limit. Whenever the framerate drops below the set limit, the limiter is effectively inactive until the framerate is once again sustained above it.
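In other words, a limiter only ever adds waiting time to frames that finish ahead of the target. A minimal Python sketch of that idea follows; it is a generic illustration with a hypothetical function name, not RTSS’s actual implementation.

```python
def limiter_wait_ms(render_ms: float, fps_limit: float) -> float:
    """Extra wait a limiter adds to one frame; zero when the frame is already too slow."""
    target_ms = 1000.0 / fps_limit  # frametime target implied by the FPS limit
    return max(0.0, target_ms - render_ms)


# With a 100 FPS limit (10ms target):
print(limiter_wait_ms(5.0, 100))   # frame finished early, limiter holds it
print(limiter_wait_ms(12.0, 100))  # framerate is below the limit, limiter does nothing
```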

Does the RTSS FPS limiter really “add” 1 frame of input lag?

Technically? No, the RTSS FPS limiter is ultimately neutral input lag-wise.

Okay, so wait a second, then why in part 11 of this article (“In-game vs. External FPS Limiters“) do I state in the last paragraph (highlighted in bold) that:

Needless to say, even if an in-game framerate limiter isn’t available, RTSS only introduces up to 1 frame of delay, which is still preferable to the 2+ frame delay added by Nvidia’s limiter with G-SYNC enabled, and a far superior alternative to the 2-6 frame delay added by uncapped G-SYNC.

Because while the RTSS limiter does have up to 1 frame of input lag, it ONLY does when directly compared to an in-game limiter.

When directly compared to uncapped at the same framerate within the G-SYNC range (e.g. within the refresh rate), an RTSS FPS limit can actually have less input lag.

Why? It all comes down to the pre-rendered frames queue…

With a fluctuating, uncapped framerate, there is no guaranteed frametime target, and without a guaranteed frametime target, the pre-rendered frames queue must step in as a fallback to ensure average framerate delivery remains as smooth and as high as possible, primarily by always having at least one “next” frame ready to be delivered.

However, with an FPS limit (RTSS or in-game), so long as the framerate can be sustained above the set limit, a constant framerate/frametime target is now ensured, and the pre-rendered frames queue effectively becomes “0,” until at any point the framerate falls below the set limit, where the queue once again takes effect.

Thus, a framerate limited by RTSS can actually have 1 frame less input lag than the same uncapped framerate does with “Maximum pre-rendered frames” at “1.”
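For a sense of scale, “frames” of delay can be converted to milliseconds at a given framerate. The Python sketch below does only that conversion; the 141 FPS figure is an assumed example framerate, and the frame counts are the ones quoted above, not new measurements.

```python
def frames_to_ms(frames: float, fps: float) -> float:
    """Convert a delay expressed in frames to milliseconds at a given framerate."""
    return frames * 1000.0 / fps


fps = 141  # assumed example: a 144Hz display with a -3 FPS limit
for frames in (1, 2, 6):
    print(f"{frames} frame(s) at {fps} FPS = {frames_to_ms(frames, fps):.2f} ms")
```

Because the cost of a frame shrinks as the framerate rises, the same frame count translates to less absolute delay at higher framerates.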

Okay, so then why exactly do in-game FPS limiters have less input lag than external FPS limiters again?

In-game FPS limiters (G-SYNC or no G-SYNC) almost always have less input lag than external FPS limiters because they can set an average FPS target at the engine-level (and let frametime run free) during the actual calculation of new frames, whereas external limiters can only set a fixed frametime target, and only after new frames have been calculated by the engine.

With RTSS, framerate limiting via a frametime target means its limiter, while slightly higher latency, is steadier when directly compared to in-game limiters. This actually makes RTSS better suited to non-VRR (variable refresh rate) syncing methods, such as standalone V-SYNC, where steadier frametimes are vital in minimizing the occurrence of mismatched synchronization between the GPU and display below the refresh rate.

For G-SYNC, however, with its frametime compensation mechanism (G-SYNC + V-SYNC “On”), and its ability to adjust the refresh rate to the framerate down to the decimal level, smaller frametime variances aren’t as important, and thus, an in-game limiter is typically recommended for the lowest input lag possible.

Should I “Disable fullscreen optimizations” per game .exe when using G-SYNC?

(UPDATED: 11/22/2020) Globally? No.

Selectively? If desired.

Why?

For games set to exclusive fullscreen mode, Windows 10 fullscreen optimizations engage (by default) a hybrid borderless/exclusive fullscreen state that allows borderless fullscreen functionality (such as letting the OS display elements like the volume slider and taskbar over the game’s window, and allowing alt+tab transitions without delay) while reportedly retaining the advantages of exclusive fullscreen, which typically allows slightly higher average framerate performance and steadier frametimes than borderless fullscreen.

Whether this hybrid borderless/exclusive mode truly is the “best of both,” remains to be fully confirmed, and has never been thoroughly tested, which is why many users disable fullscreen optimizations when pairing G-SYNC with exclusive fullscreen just to rule out any possible unknown and/or unwanted behaviors.

The majority of games featuring DX11 and up (as well as Vulkan and OpenGL titles) appear to allow G-SYNC functionality whether fullscreen optimizations are enabled or disabled, but as of the latest Windows 10 version, if fullscreen optimizations are disabled in certain (especially older and/or Unity engine-based) games featuring DX9 or lower, G-SYNC may not function in exclusive fullscreen at all.

Update: this particular issue has been amended in the more recent Windows 10 releases.

So while it’s usually safe to disable this option for most games, it should first be verified that G-SYNC is functioning (either via the “G-SYNC indicator” setting in NVCP, or built-in refresh rate meter on supported monitors) when the “Disable fullscreen optimizations” checkbox is “checked” per game exe.

Finally, the fullscreen optimizations setting (short of possible DPI and/or DSR scaling quirks in some instances when enabled) appears to have no effect on G-SYNC functionality with games set to borderless or windowed mode.