Test Setup

| Component | Details |
| --- | --- |
| High Speed Camera | Casio Exilim EX-ZR200 w/1000 FPS 224×64px video capture |
| Display | Acer Predator XB252Q 240Hz w/G-SYNC (1920×1080) |
| Mouse | Razer Deathadder Chroma modified w/external LED |
| Nvidia Driver | 381.78 |
| Nvidia Control Panel | Default settings (“Prefer maximum performance” enabled) |
| OS | Windows 10 Home 64-bit (Creators Update) |
| Motherboard | ASRock Z87 Extreme4 |
| Power Supply | EVGA SuperNOVA 750 W G2 |
| Heatsink | Hyper 212 Evo w/2x Noctua NF-F12 fans |
| CPU | i7-4770k @4.2GHz w/Hyper-Threading enabled (8 cores, unparked: 4 physical/4 virtual) |
| GPU | EVGA GTX 1080 FTW GAMING ACX 3.0 w/8GB VRAM & 1975MHz Boost Clock |
| Sound Card | Creative Sound Blaster Z (optical audio) |
| RAM | 16GB G.SKILL Sniper DDR3 @1866 MHz (dual-channel: 9-10-9-28, 2T) |
| SSD (OS) | 256GB Samsung 850 Pro |
| HDD (Games) | 5TB Western Digital Black 7200 RPM w/128 MB cache |
| Test Game #1 | Overwatch w/lowest settings, “Reduced Buffering” enabled |
| Test Game #2 | Counter-Strike: Global Offensive w/lowest settings, “Multicore Rendering” disabled |

Introduction

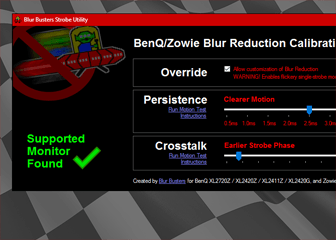

The input lag testing method used in this article was pioneered by Blur Busters’ Mark Rejhon, and originally featured in his 2014 Preview of NVIDIA G-SYNC, Part #2 (Input Lag) article. It has since become the standard among testers, and is used by a variety of sources across the web.

Middle Screen vs. First On-screen Reaction

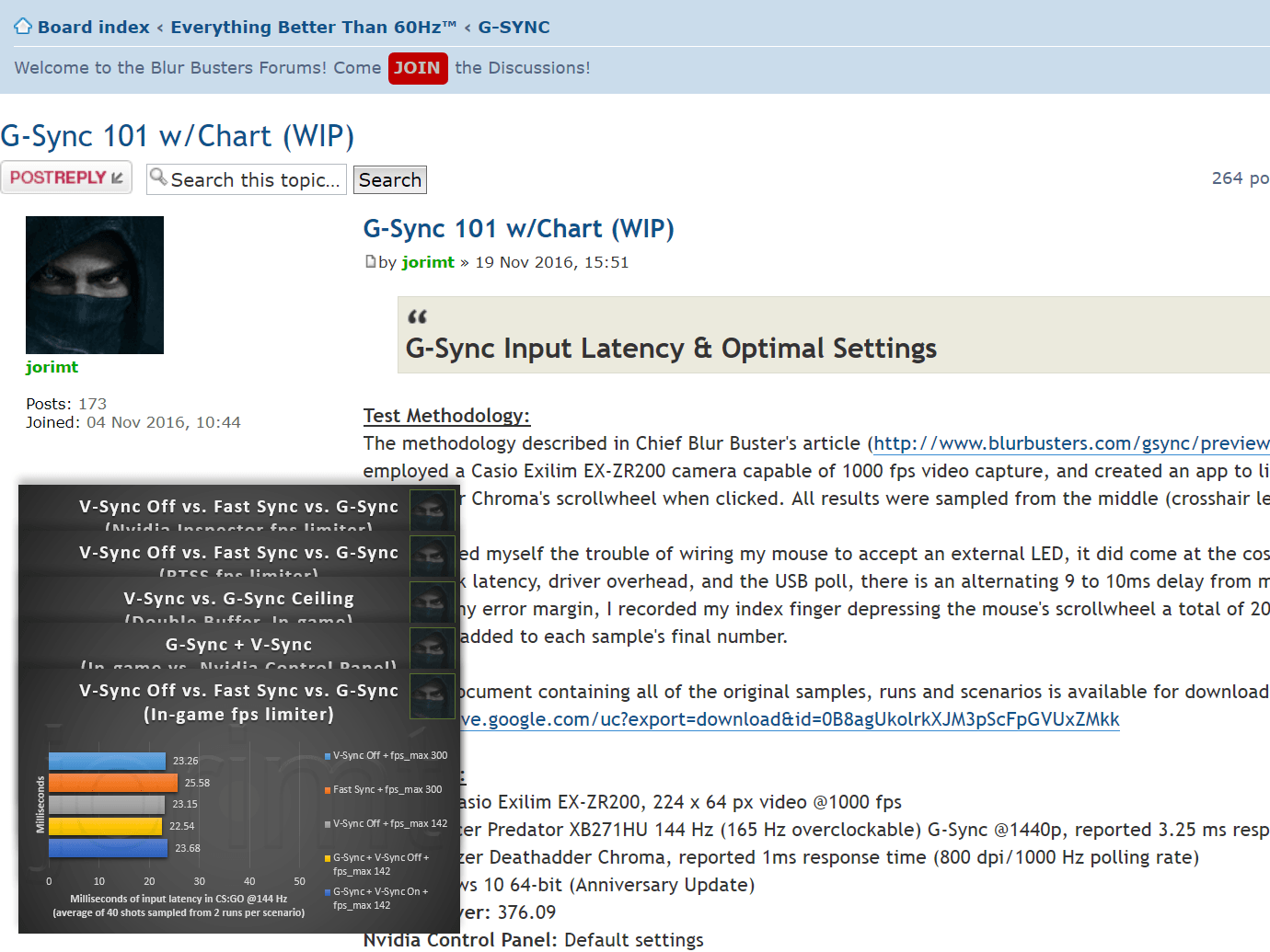

In my original input lag tests featured in this thread on the Blur Busters Forums, I measured middle screen (crosshair-level) reactions at a single refresh rate (144Hz), and found that both V-SYNC OFF and G-SYNC, at the same framerate within the refresh rate, delivered frames to the middle of the screen at virtually the same time. This still holds true.

However, while middle screen measurements are a common and fully valid input lag testing method, they are limited in what they can reveal. They do not account for the first on-screen reaction, and so can mask the subtle and not so subtle differences in frame delivery between V-SYNC OFF and various syncing solutions, which is why I opted to capture the entire screen this time around.

Due to the differences between the two test methods, V-SYNC OFF results generated from first on-screen measurements, especially at lower refresh rates (for reasons that will later be explained), can appear to have up to twice the input lag reduction of middle screen readings:

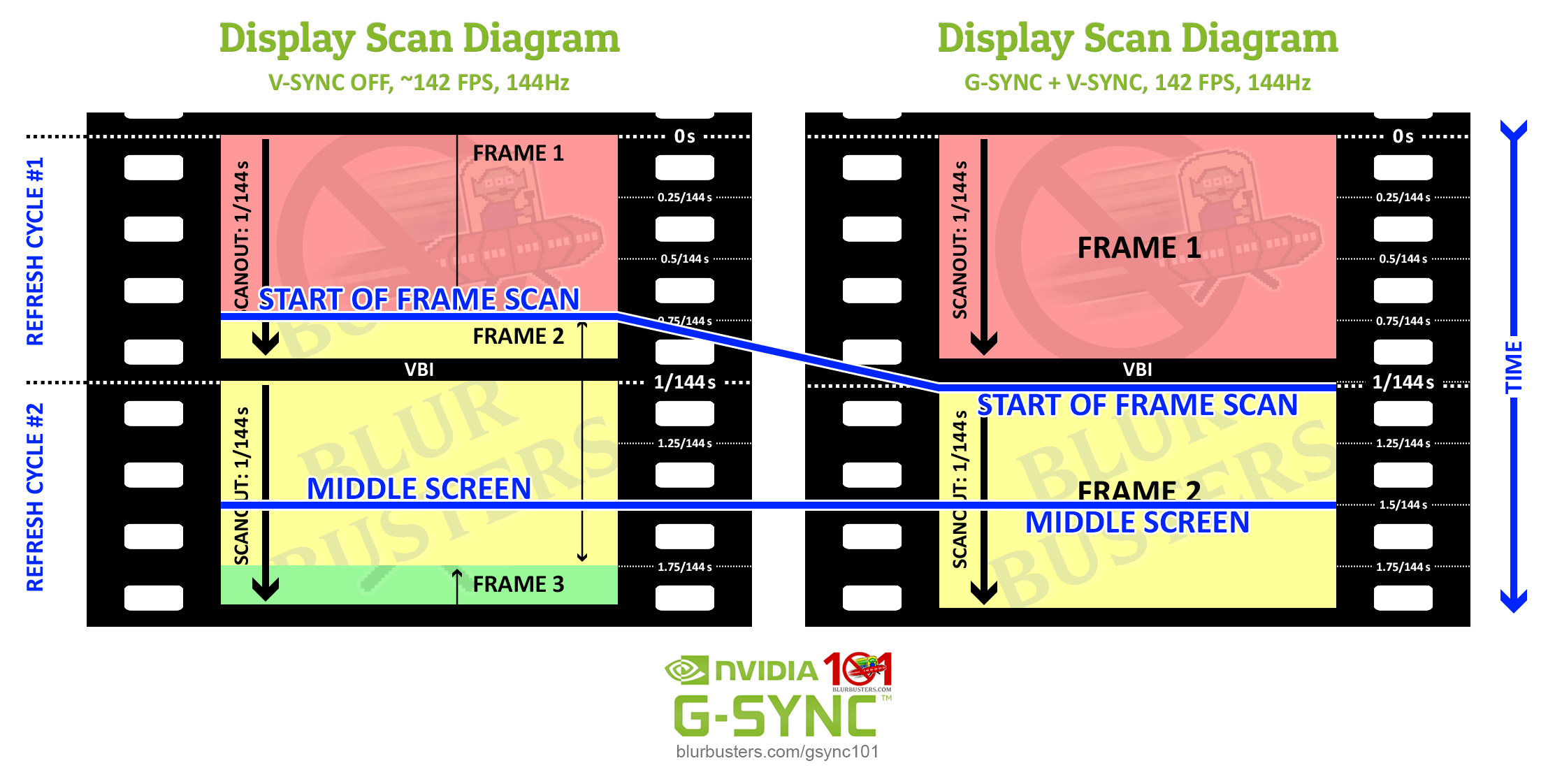

As the diagram shows, this is because the measurement of the first on-screen reaction is begun at the start of the frame scan, whereas the measurement of the middle screen reaction is begun at crosshair-level, where, with G-SYNC, the in-progress frame scan is already half completed, and with V-SYNC OFF, can be at various percentages of completion, depending on the given refresh rate/framerate offset.

When V-SYNC OFF is directly compared to FPS-limited G-SYNC at crosshair-level, even with V-SYNC OFF’s framerate at up to 3x the refresh rate, middle screen readings are virtually a wash (the results in this article included). But, as will be detailed later, V-SYNC OFF can, for lack of a better term, “defeat” the scanout by beginning the next frame scan in the previous scanout.

With V-SYNC OFF at 2 FPS below the refresh rate, for instance (the scenario used to compare V-SYNC OFF directly against G-SYNC in this article), the tearline will continuously roll downwards, which means, when measured by first on-screen reactions, its advantage over G-SYNC can be anywhere from 0 to 1/2 frame, depending on the ever-fluctuating position of the tearline between samples. With middle screen readings, the initial position of the tearline(s), and thus, its advantage, is effectively ignored.

These differences should be kept in mind when inspecting the upcoming results, with the method featured in this article being the best case scenario for V-SYNC OFF, and the worst case scenario for synced scenarios (G-SYNC included) when directly compared to V-SYNC OFF.
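The rolling tearline described above can be illustrated with a minimal sketch. It is an idealized model, not the article's measurement method: it assumes perfectly constant frame times, ignores the vertical blanking interval, and treats the scanout as taking the full refresh period. The function name `tearline_positions` and the 144Hz/142 FPS pairing are hypothetical choices for illustration.

```python
from fractions import Fraction

def tearline_positions(refresh_hz, fps, n_frames):
    """Return where in the scanout each new frame begins.

    0 is the top of the screen and values approaching 1 are the bottom.
    Idealized assumption: constant frame times, no vertical blanking.
    """
    refresh_period = Fraction(1, refresh_hz)
    frame_period = Fraction(1, fps)
    # Exact rational arithmetic avoids floating-point drift in the modulo.
    return [
        (n * frame_period % refresh_period) / refresh_period
        for n in range(n_frames)
    ]

# Hypothetical example: V-SYNC OFF at 2 FPS below a 144Hz refresh rate.
positions = tearline_positions(refresh_hz=144, fps=142, n_frames=72)
for n in (0, 1, 2, 35, 71):
    print(f"frame {n}: tearline at {float(positions[n]):.3f} of screen height")
```

Under these assumptions, each frame lands slightly lower than the last, and the tearline wraps back to the top after 71 frames (half a second at 142 FPS), which is why its position differs from one click sample to the next.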

Test Methodology

To further facilitate the first on-screen reaction method, I’ve changed sample capture from muzzle flash to strafe for Overwatch (credit goes to Battle(non)sense for the initial suggestion) and look for CS:GO, which triggers horizontal updates across the entire screen. The strafe/look mechanics are also more consistent from click to click, and less prone to the built-in variable delay experienced from shot to shot with the previous method.

To ensure a proper control environment for testing, and rule out as many variables as possible, the Nvidia Control Panel settings (but for “Power management mode” set to “Prefer maximum performance”) were left at defaults, all background programs were closed, and all overlays were disabled, as was the Creators Update’s newly introduced “Game Mode,” and .exe Compatibility option “fullscreen optimizations,” along with the existing “Game bar” and “Game DVR” options.

To guarantee extraneous mouse movements didn’t throw off input reads during rapid clicks, masking tape was placed over the sensor of the modified test mouse (Deathadder Chroma), and a second mouse (Deathadder Elite) was used to navigate the game menus and get into place for sample capture.

To emulate lower maximum refresh rates on the native 240Hz Acer Predator XB252Q, “Preferred refresh rate” was set to “Application-controlled” when G-SYNC was enabled, and the refresh rate was manually adjusted as needed in the game options (Overwatch), or on the desktop (CS:GO) before launch.

And, finally, to validate and track the refresh rate, framerate, and the syncing solution in use for each scenario, the in-game FPS counter, Nvidia Control Panel’s G-SYNC Indicator, and the display’s built-in refresh rate meter were active at all times.

Testing was performed with a Casio Exilim EX-ZR200 capable of 1000 FPS high speed video capture (accurate within 1ms), and a Razer Deathadder Chroma modified with an external LED (credit goes to Chief Blur Buster for the mod), which lights up on left click, and has a reactive variance of <1ms.

To compensate for the camera’s low 224×64 pixel video resolution, a bright image with stark contrast between foreground and background, and thin vertical elements that could easily betray horizontal movement across the screen, were needed for reliable discernment of first reactions after click.

For Overwatch, Genji was used due to his smaller viewmodel and ability to scale walls, and an optimal spot on the game’s Practice Range was found that met the aforementioned criteria. Left click was mapped to strafe left, in-game settings were at the lowest available, and “Reduced Buffering” was enabled to ensure the lowest input latency possible.

For CS:GO, a custom map provided by the Blur Busters Forum’s lexlazootin was used, which strips all unnecessary elements (time limits, objectives, assets, viewmodel, etc), and contains a lone white square suspended in a black void, that when positioned just right, allows the slightest reactions to be accurately spotted via the singular vertical black and white separation. Left click was mapped to look left, in-game settings were at the lowest available, and “Multicore Rendering” was disabled to ensure the lowest input latency possible.

For capture, the Acer Predator XB252Q (LED fixed to its left side) was recorded as the mouse was clicked a total of ten times. To average out differences between runs, this process was repeated four times per scenario, and each game was restarted after each run.

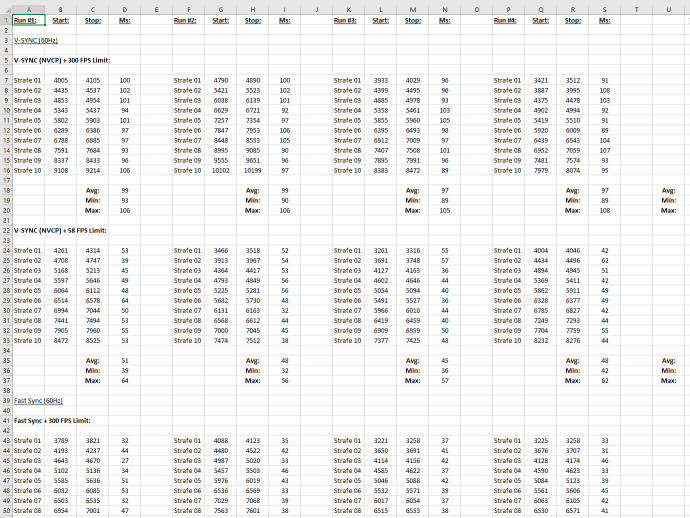

Once all scenarios were recorded, the .mov format videos, containing ten samples each, were inspected in QuickTime using its built-in frame counter and single frame stepping function via the arrow keys. The video was jogged through until the LED lit up, at which point the frame number was input into an Excel spreadsheet. Frames (which, thanks to 1000 FPS video capture, represent a literal 1ms each) were then stepped through until the first reaction was spotted on-screen, where, again, the frame number was input into the spreadsheet. This generated the total delay in milliseconds from left click to first on-screen reaction, and the process was repeated per video, ad nauseam.
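The frame-counting step above amounts to a simple subtraction, sketched here in Python rather than in the spreadsheet actually used. The frame numbers are made up for illustration, and the `delay_ms` and `summarize` helpers, along with the choice of min/avg/max as summary statistics, are assumptions rather than the article's own formulas.

```python
from statistics import mean

CAMERA_FPS = 1000  # high speed capture rate; each video frame spans 1 ms

def delay_ms(led_frame, reaction_frame, camera_fps=CAMERA_FPS):
    """Delay from LED light-up (click) to first on-screen reaction."""
    if reaction_frame < led_frame:
        raise ValueError("reaction frame cannot precede the LED frame")
    return (reaction_frame - led_frame) * 1000 / camera_fps

def summarize(delays):
    """Collapse a run's samples into min/avg/max (assumed statistics)."""
    return {"min": min(delays), "avg": mean(delays), "max": max(delays)}

# Hypothetical (LED frame, first reaction frame) pairs from one video.
samples = [(1204, 1223), (2410, 2426), (3651, 3672)]
delays = [delay_ms(led, reaction) for led, reaction in samples]
summary = summarize(delays)
print(delays, summary)
```

At 1000 FPS the frame difference is already the delay in milliseconds; the `camera_fps` parameter only matters if the same bookkeeping is reused with a different capture rate.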

All told, 508 videos weighing in at 17.5GB, with an aggregated (albeit slow-motion) 45 hour and 40 minute runtime, were recorded across 2 games and 6 refresh rates, containing a total of 42 scenarios, 508 runs, and 5080 individual samples. My original Excel spreadsheet is available for download here, and can also be viewed online here.

To preface, the following results and explanations assume that the native resolution w/default timings are in use on a single monitor in exclusive fullscreen mode, paired with a single-GPU desktop system that can sustain the framerate above the refresh rate at all times.

This article does not seek to measure the impact of input lag differences incurred by display, input device, CPU or GPU overclocks, RAM timings, disk drives, drivers, BIOS, OS, or in-game graphical settings. And the baseline numbers represented in the results are not indicative of other systems, and should not be expected to be replicable on them, as they will vary in configuration, specs, and the games being run.

This article seeks only to measure the impact that V-SYNC OFF, G-SYNC, V-SYNC, and Fast Sync, paired with various framerate limiters, have on frame delivery and input lag, and the differences between them; the results of which are replicable across setups.

+/- 1ms differences between identical scenarios in the following charts are usually within margin of error, while +/- 1ms differences between separate scenarios are usually measurable, and the error margin may not apply. And finally, all mentions of “V-SYNC (NVCP)” in the denoted scenarios signify that the Nvidia Control Panel’s “Vertical sync” entry was set to “On,” and “V-SYNC OFF” or “G-SYNC + V-SYNC ‘Off'” signify that “Use the 3D application setting” was applied w/V-SYNC disabled in-game.

So, without further ado, onto the results…

Input Lag: Not All Frames Are Created Equal

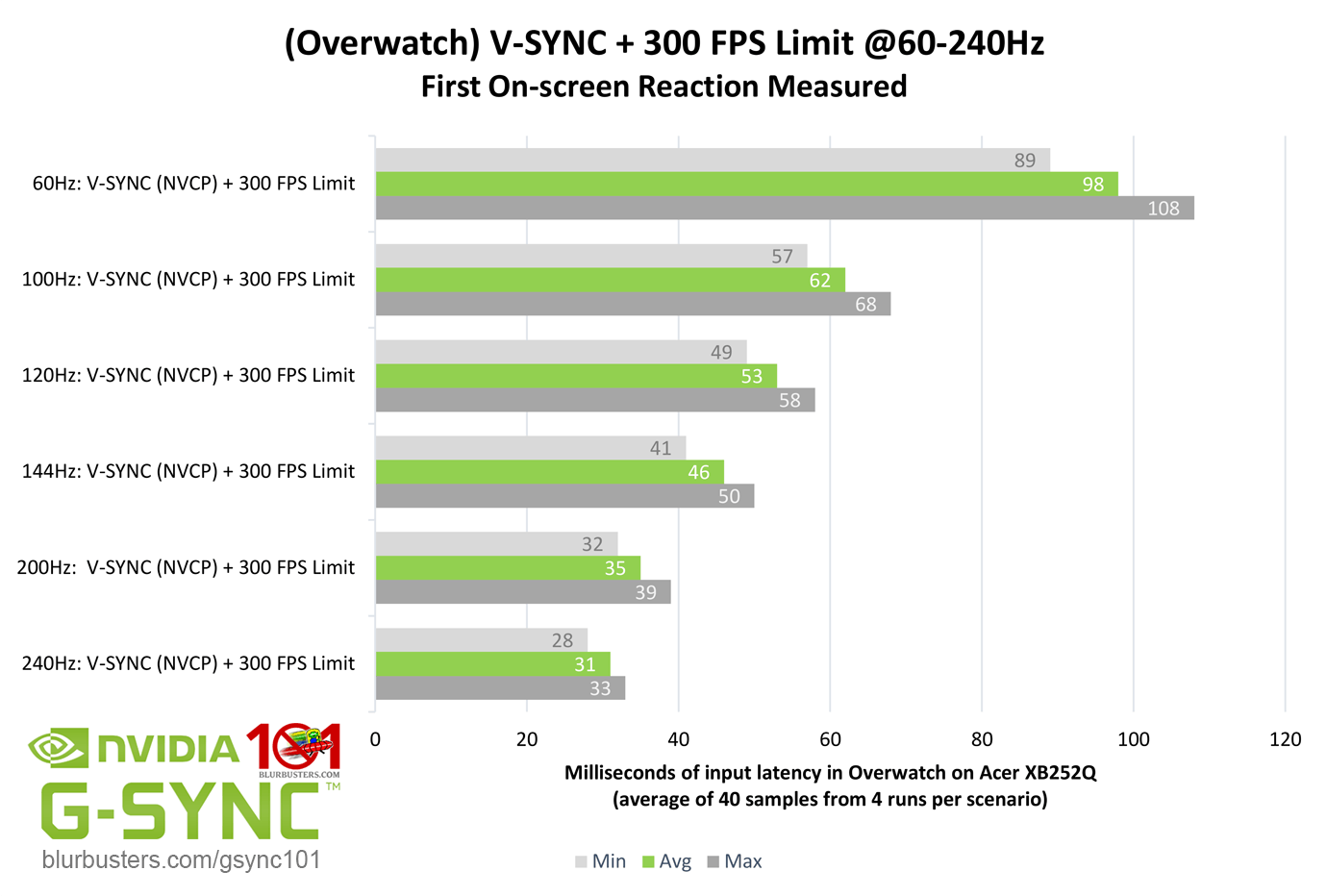

When it is said that there is “1 frame” or “2 frames” of delay, what does that actually mean? In this context, a “frame” signifies the total time a rendered frame takes to be displayed completely on-screen. The worth of a single frame is dependent on the display’s maximum native refresh rate. At 60Hz, a frame is worth 16.6ms, at 100Hz: 10ms, 120Hz: 8.3ms, 144Hz: 6.9ms, 200Hz: 5ms, and 240Hz: 4.2ms, continuing to decrease in worth as the refresh rate increases.
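The per-frame figures above follow from dividing one second by the refresh rate. As a quick sketch (the helper name `frame_time_ms` is just an illustrative choice):

```python
def frame_time_ms(refresh_hz):
    """Time one full refresh cycle takes, in milliseconds."""
    return 1000.0 / refresh_hz

# Refresh rates listed in the paragraph above.
for hz in (60, 100, 120, 144, 200, 240):
    print(f"{hz}Hz: {frame_time_ms(hz):.2f} ms per frame")
```

Note that the exact value at 60Hz is about 16.67ms, so the 16.6ms figure quoted above appears to be truncated rather than rounded.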

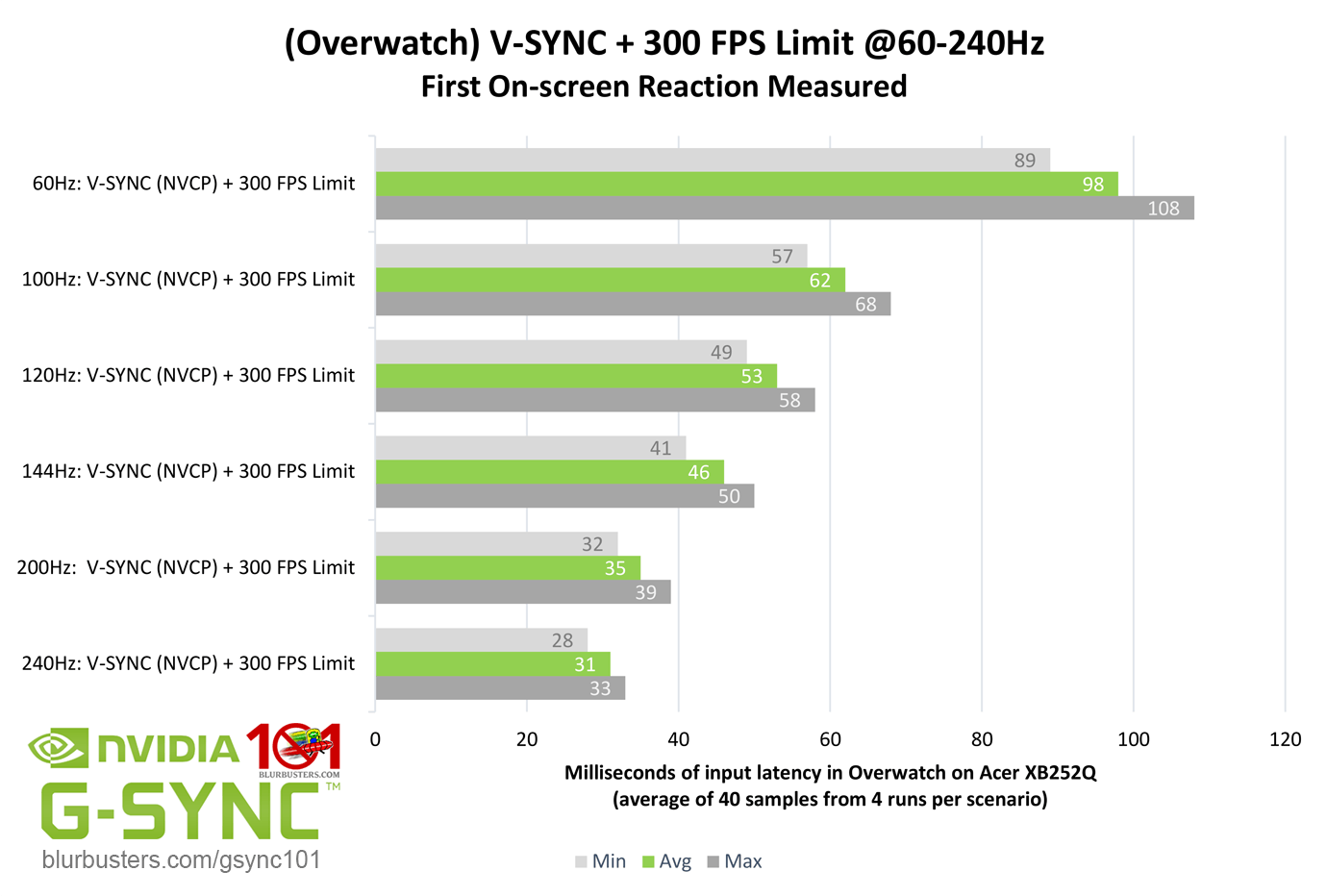

With double buffer V-SYNC, there is typically a 2 frame delay when the framerate exceeds the refresh rate, but this isn’t always the case. Overwatch, even with “Reduced Buffering” enabled, can have up to 4 frames of delay with double buffer V-SYNC engaged.

The chart above depicts anywhere from 3 to 3 1/2 frames of added delay. At 60Hz, this is significant, at up to 58.1ms of additional input lag. At 240Hz, where a single frame is worth far less (4.2ms), a 3 1/2 frame delay is comparatively insignificant, at up to 14.7ms.

In other words, a “frame” of delay is relative to the refresh rate, which dictates how much or how little delay is incurred per frame, a relationship which should be kept in mind going forward.
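As a worked version of the arithmetic above, with $n$ as the number of frames of delay and $f$ as the refresh rate in Hz (symbols introduced here for illustration):

$$
t_{\text{delay}} = n \times \frac{1000\ \text{ms}}{f}
$$

$$
3.5 \times \frac{1000\ \text{ms}}{60} \approx 58.3\ \text{ms}, \qquad 3.5 \times \frac{1000\ \text{ms}}{240} \approx 14.6\ \text{ms}
$$

These differ slightly from the 58.1ms and 14.7ms quoted earlier, which appear to result from multiplying the already-rounded per-frame figures (16.6ms and 4.2ms) by 3.5.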