Beyond the Limits of the Scanout

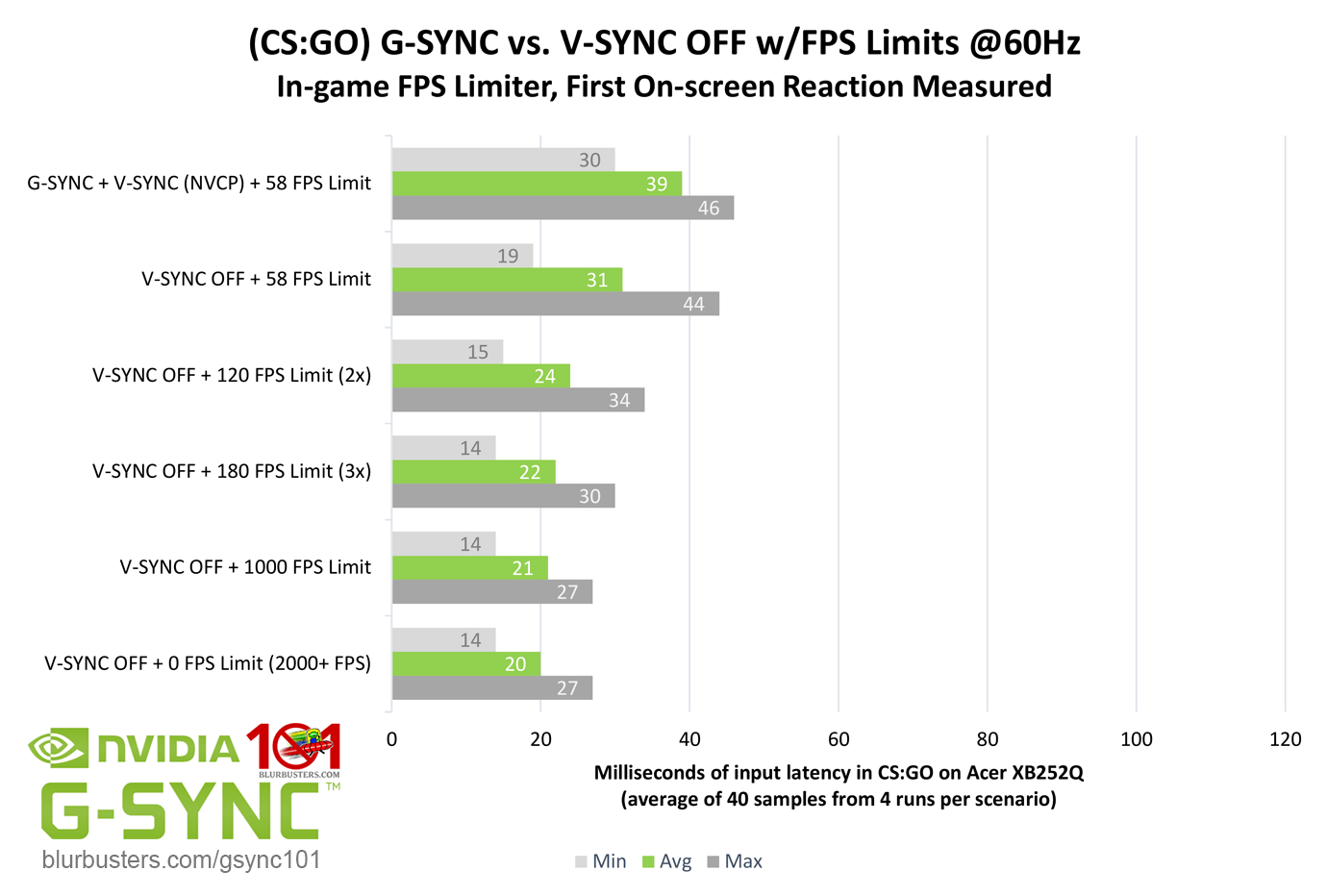

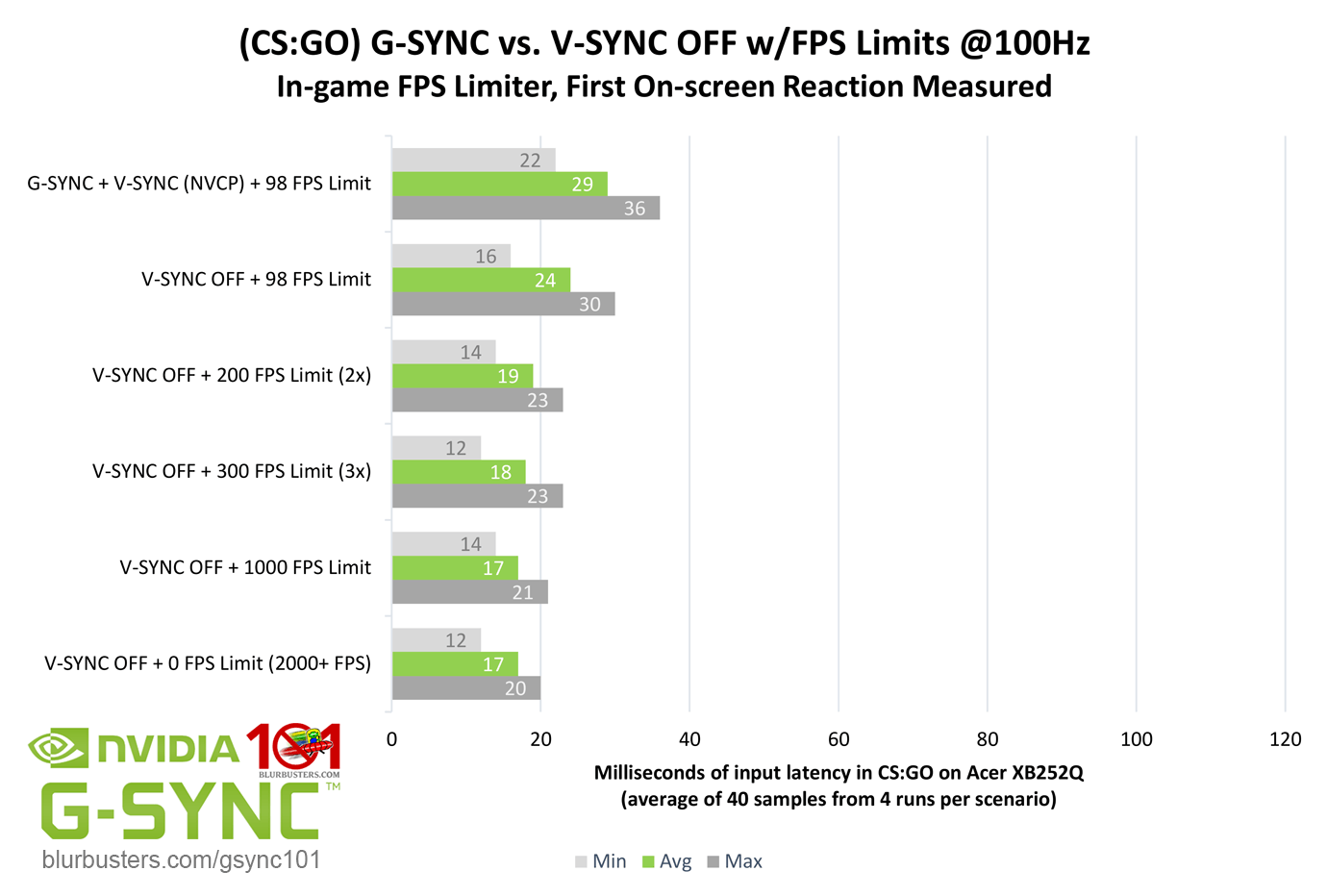

It’s already been established that single, tear-free frame delivery is limited by the scanout, and that V-SYNC OFF can defeat this limit by allowing more than one frame scan per scanout. That said, how much of an input lag advantage can be had over G-SYNC, and how far above the refresh rate must the framerate be sustained to diminish tearing artifacts and justify the difference?

Quite high. Counting first on-screen reactions, V-SYNC OFF already has a slight input lag advantage (up to 1/2 frame) over G-SYNC at the same framerate, more so at lower refresh rates, but it takes a considerable increase in framerate above the given refresh rate to widen the gap to significant levels. The reductions may look significant in bar chart form, but even with framerates in excess of 3x the refresh rate, and when measured at middle screen (crosshair-level) only, V-SYNC OFF has a limited advantage over G-SYNC in practice. Most of that advantage falls in areas that one could argue are comparatively useless for the average player, such as a viewmodel’s wrist being updated 1-3ms faster with V-SYNC OFF.
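To put the "up to 1/2 frame" figure in milliseconds, here is a minimal sketch. It assumes "1/2 frame" means half of one frametime at the given framerate; the function name `half_frame_ms` is made up for illustration and is not part of any test tooling.

```python
def half_frame_ms(fps: float) -> float:
    """Half of one frametime, in milliseconds (assumed meaning of '1/2 frame')."""
    frametime_ms = 1000.0 / fps
    return 0.5 * frametime_ms


# Framerates matched to common refresh rates; the ceiling shrinks as they rise.
for fps in (60, 144, 240):
    print(f"{fps} FPS: up to {half_frame_ms(fps):.2f} ms")
```

Under this reading, the ceiling on the advantage is several milliseconds at 60 FPS but only a couple at 240 FPS, which is consistent with the gap mattering more at lower refresh rates.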

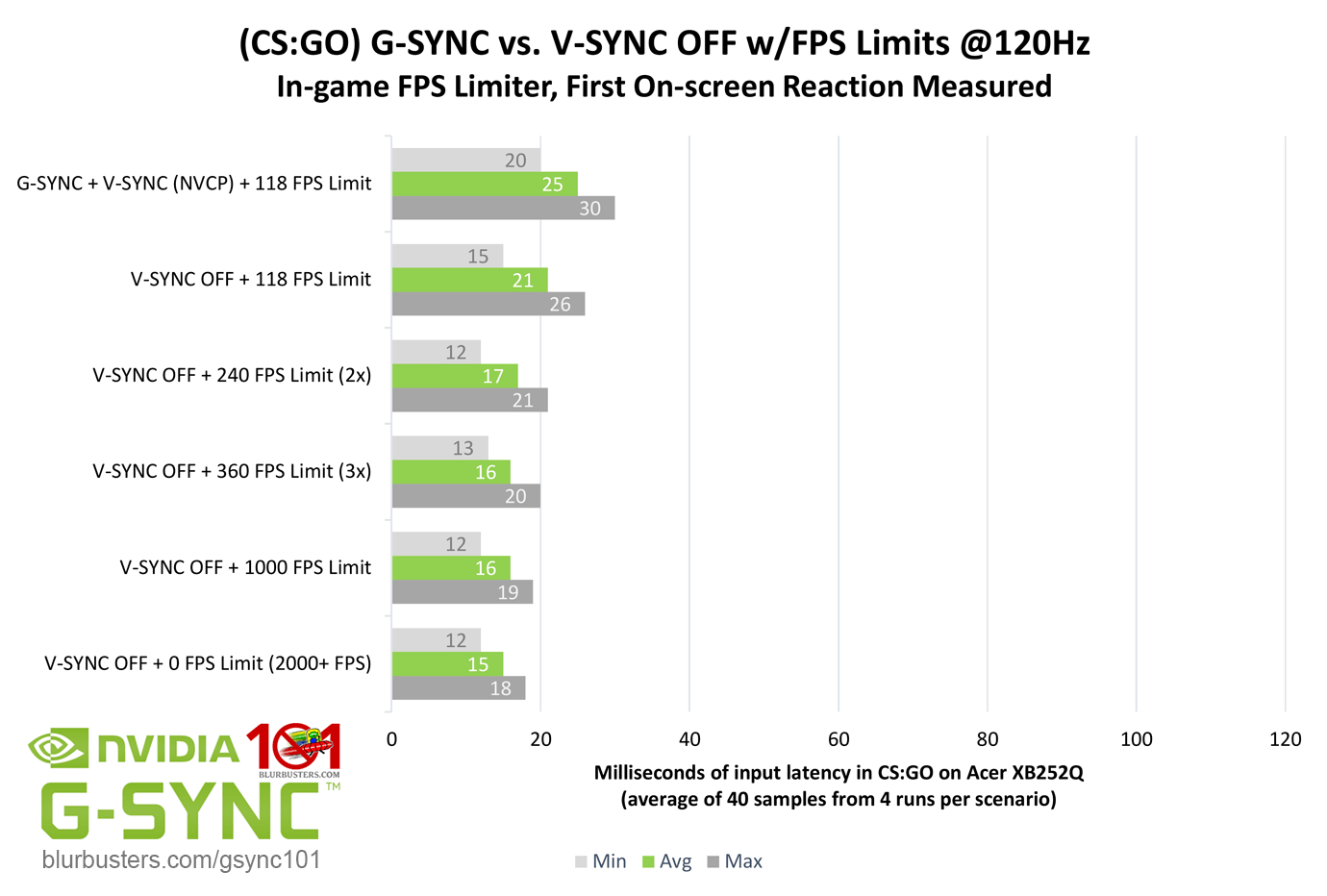

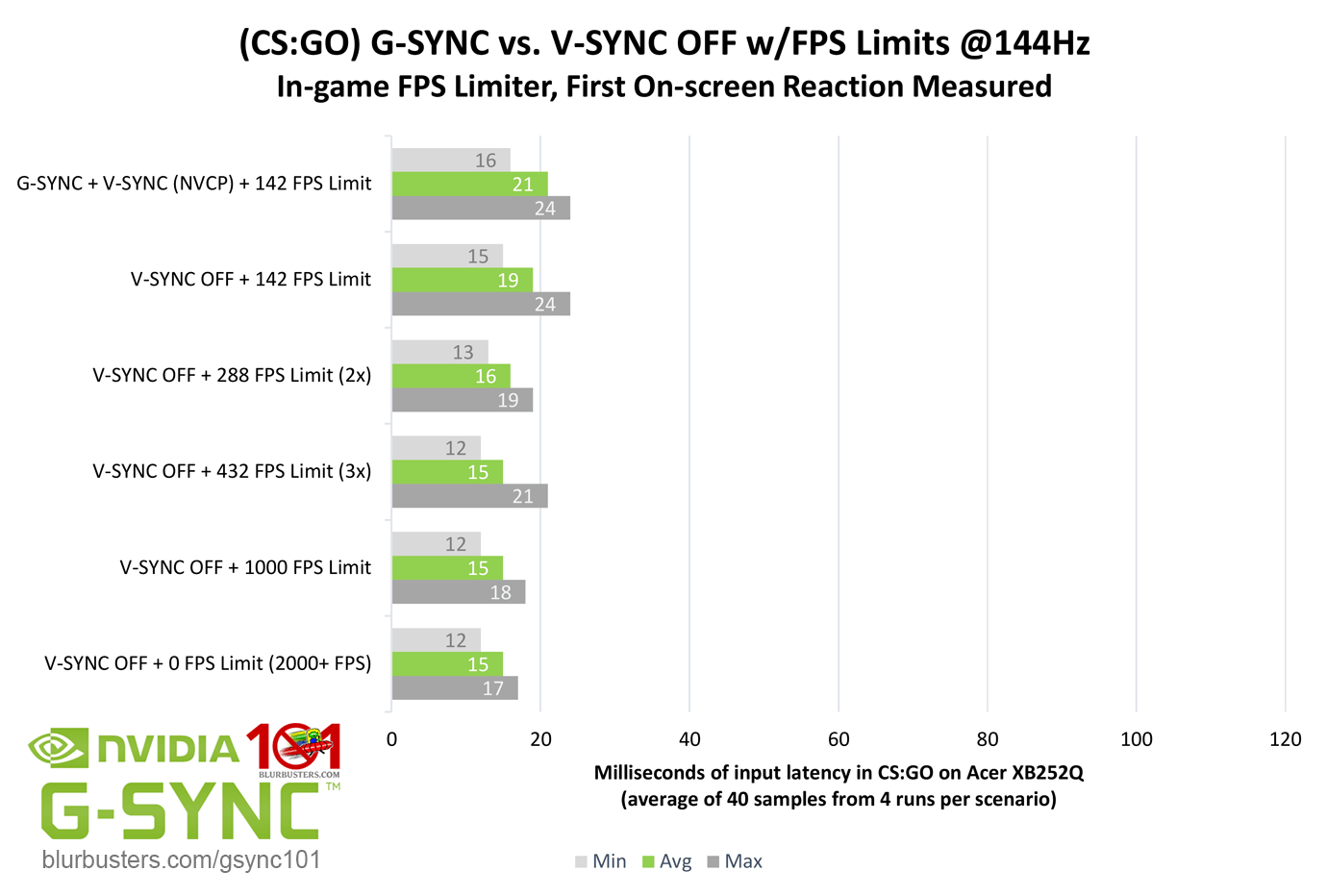

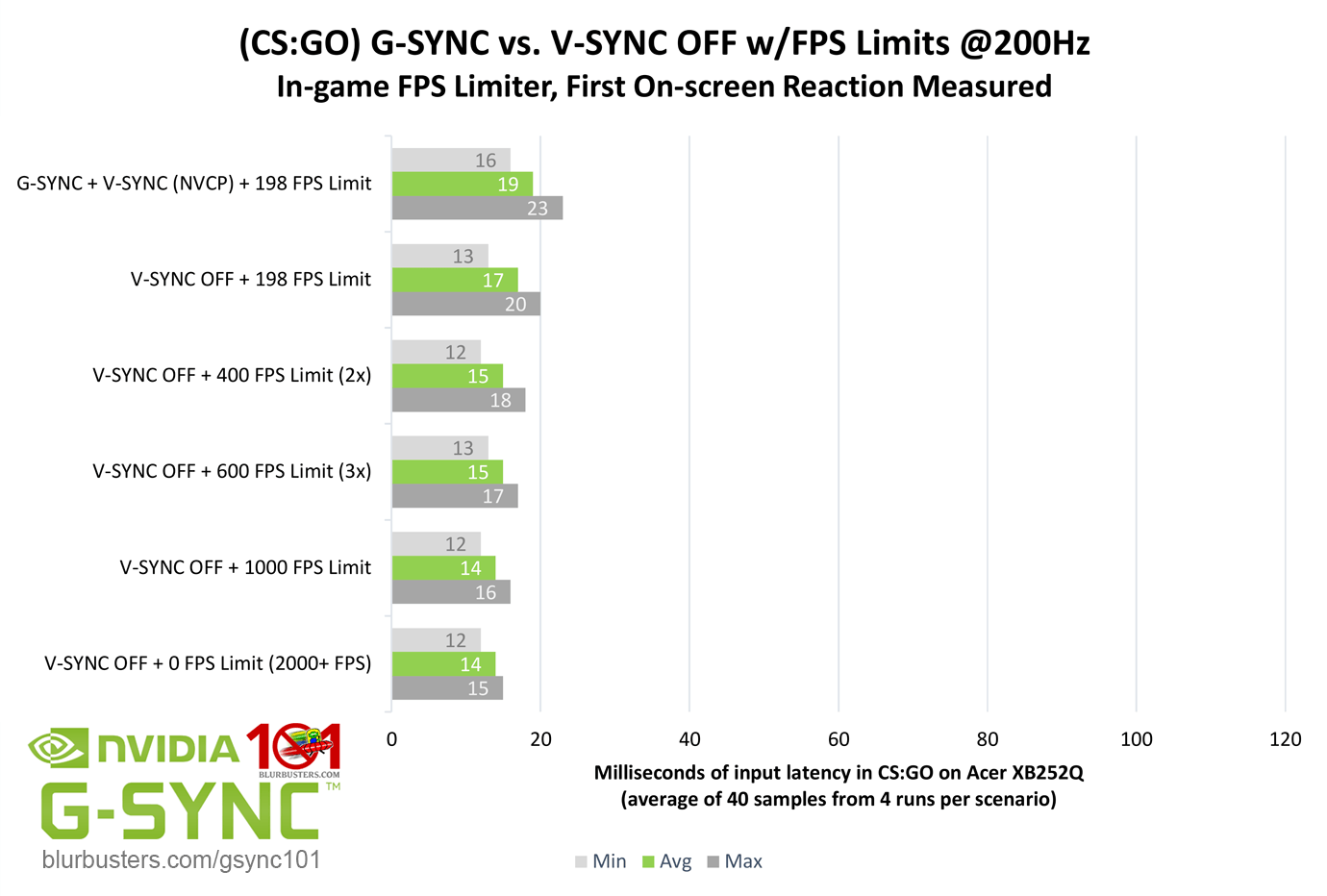

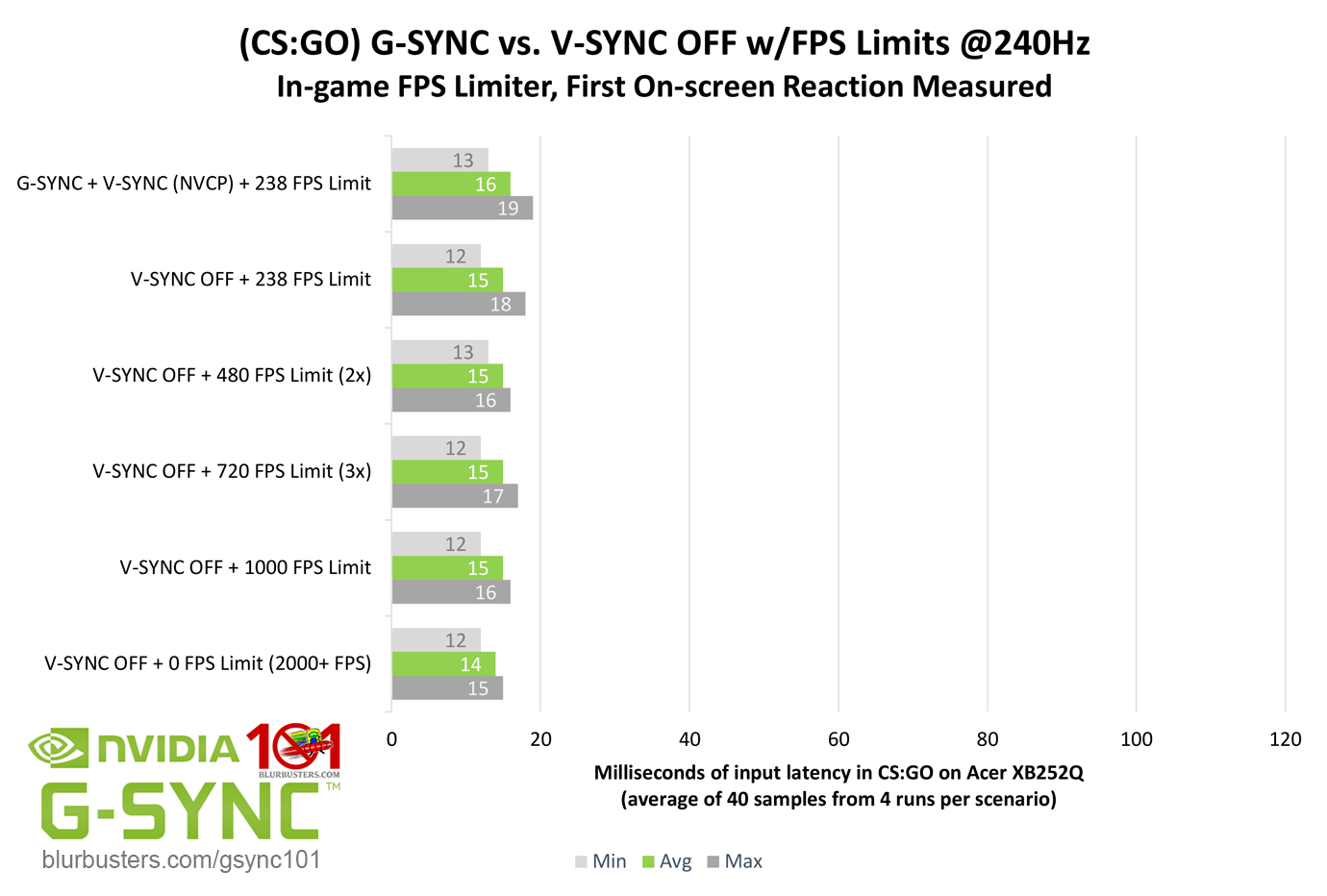

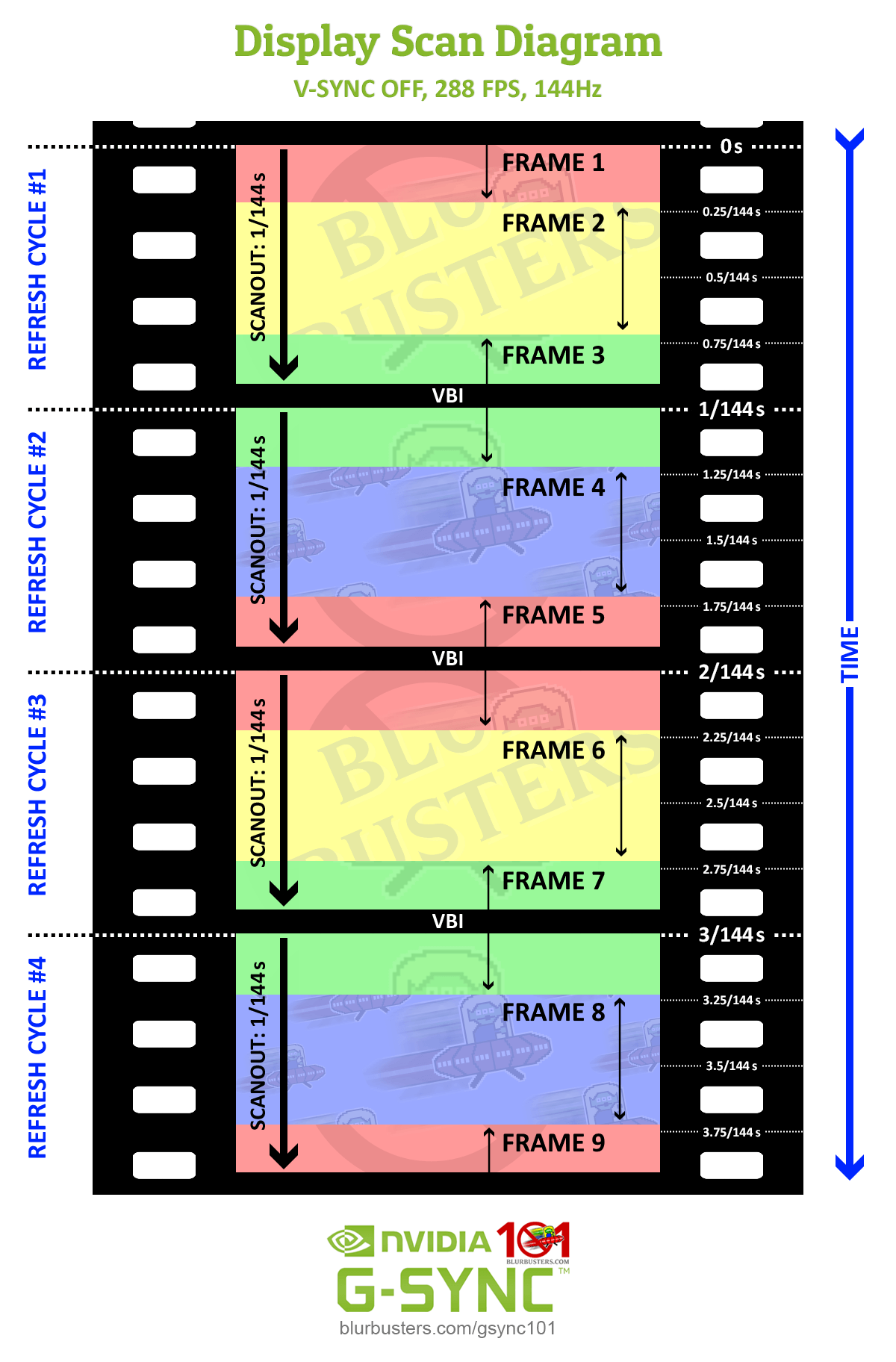

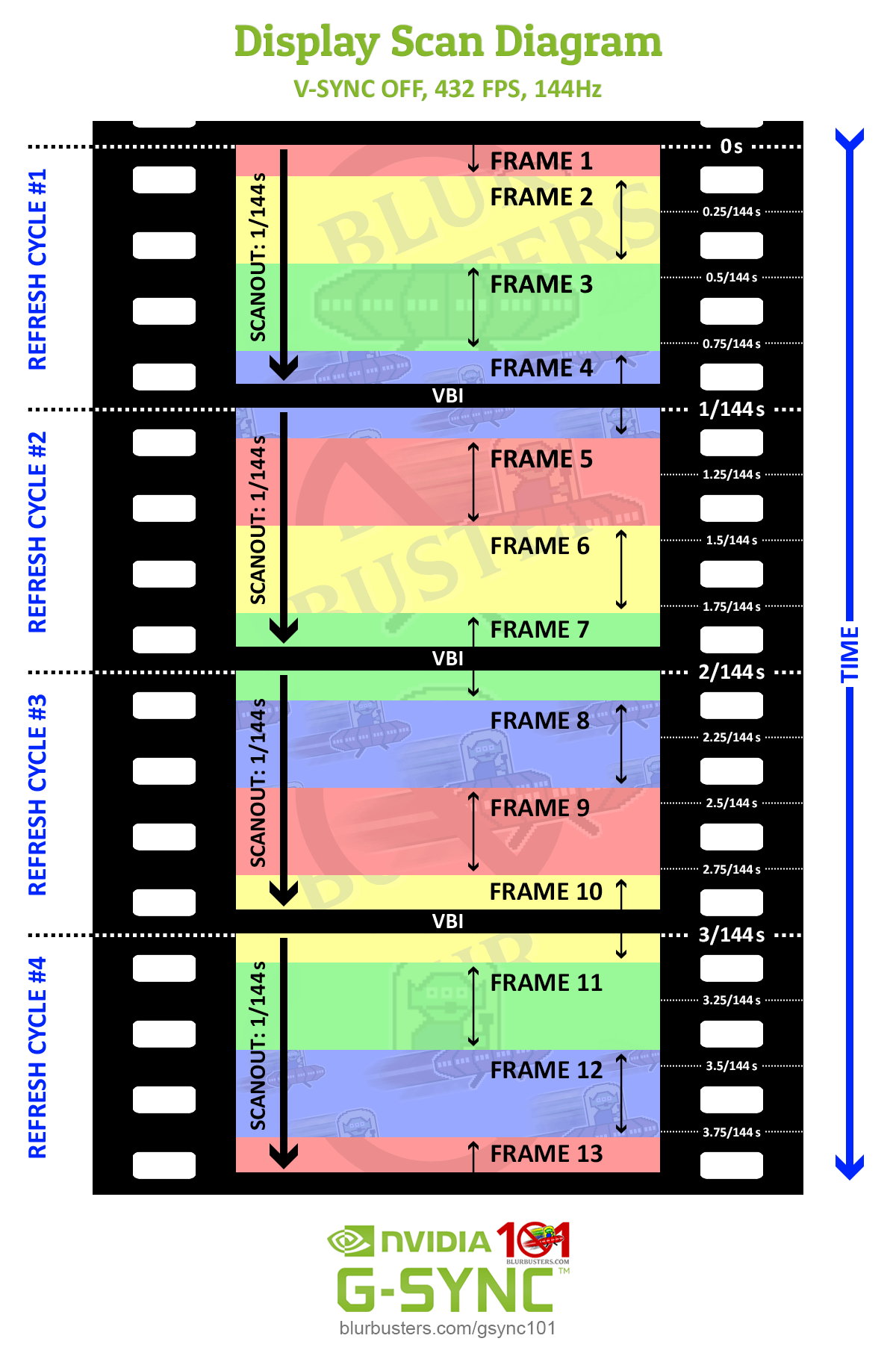

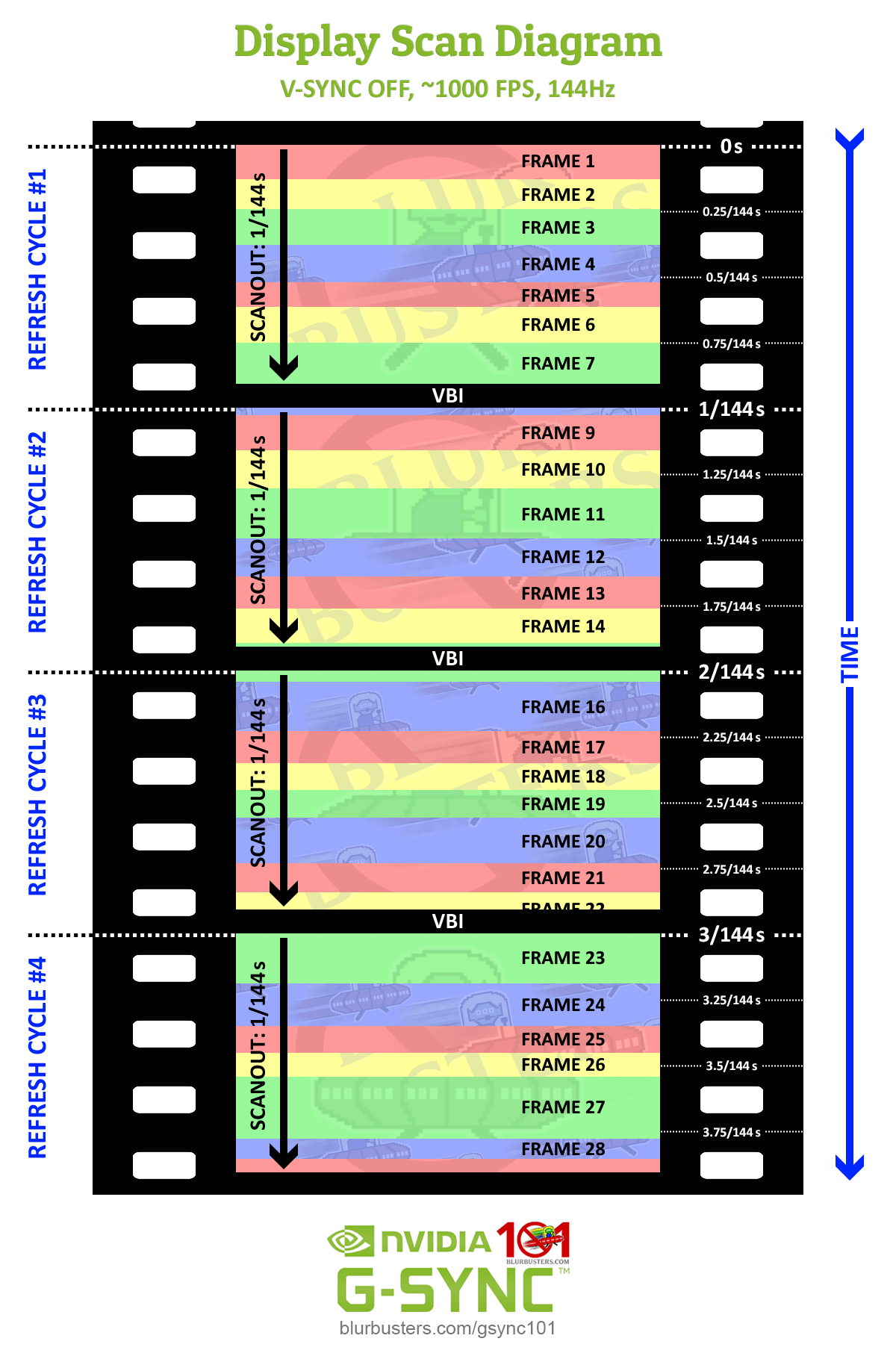

This is where the refresh rate/sustained framerate ratio factors in:

As shown in the above diagrams, the true advantage comes when V-SYNC OFF can allow not just two, but multiple frame scans in a single scanout. Unlike syncing solutions, with V-SYNC OFF, the frametime is not paced to the scanout, and a frame will begin scanning in as soon as it’s rendered, regardless of whether the previous frame scan is still in progress. At 144Hz with 1000 FPS, for instance, a sustained frametime of 1ms means the display updates nearly 7 times in a single scanout.
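The 144Hz figure can be checked with a rough calculation. This sketch assumes, for simplicity, that one scanout lasts the full refresh period (vertical blanking is ignored) and that the frametime is perfectly steady; `frame_scans_per_scanout` is an illustrative name only.

```python
def frame_scans_per_scanout(refresh_hz: float, fps: float) -> float:
    """Approximate number of frame scans that begin during one scanout.

    Assumes the scanout spans the whole refresh period and that the
    framerate is perfectly sustained (both are simplifications).
    """
    scanout_ms = 1000.0 / refresh_hz   # time to draw one refresh, top to bottom
    frametime_ms = 1000.0 / fps        # time between rendered frames
    return scanout_ms / frametime_ms


for refresh_hz in (60, 144, 240):
    scans = frame_scans_per_scanout(refresh_hz, fps=1000)
    print(f"{refresh_hz}Hz @ 1000 FPS: ~{scans:.2f} frame scans per scanout")
```

At 144Hz the scanout takes roughly 6.94ms, so a 1ms frametime fits just under 7 frame scans into it. The same framerate yields fewer scans per scanout at 240Hz, which is why the ratio of framerate to refresh rate, not the raw framerate, is the useful measure.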

In fact, at 240Hz, first on-screen reactions became so fast at 1000 FPS and 0 FPS that the inherent delay in my mouse and display became the bottleneck for minimum measurements.

So, for competitive players, V-SYNC OFF still reigns supreme in the input lag realm, especially if sustained framerates can exceed the refresh rate by 5x or more. However, while visible tearing artifacts are all but eliminated at these ratios on higher refresh rates, tearing can instead manifest as microstutter, and thus, even at its best, V-SYNC OFF still can’t match the consistency of G-SYNC frame delivery.