Identical or Fraternal?

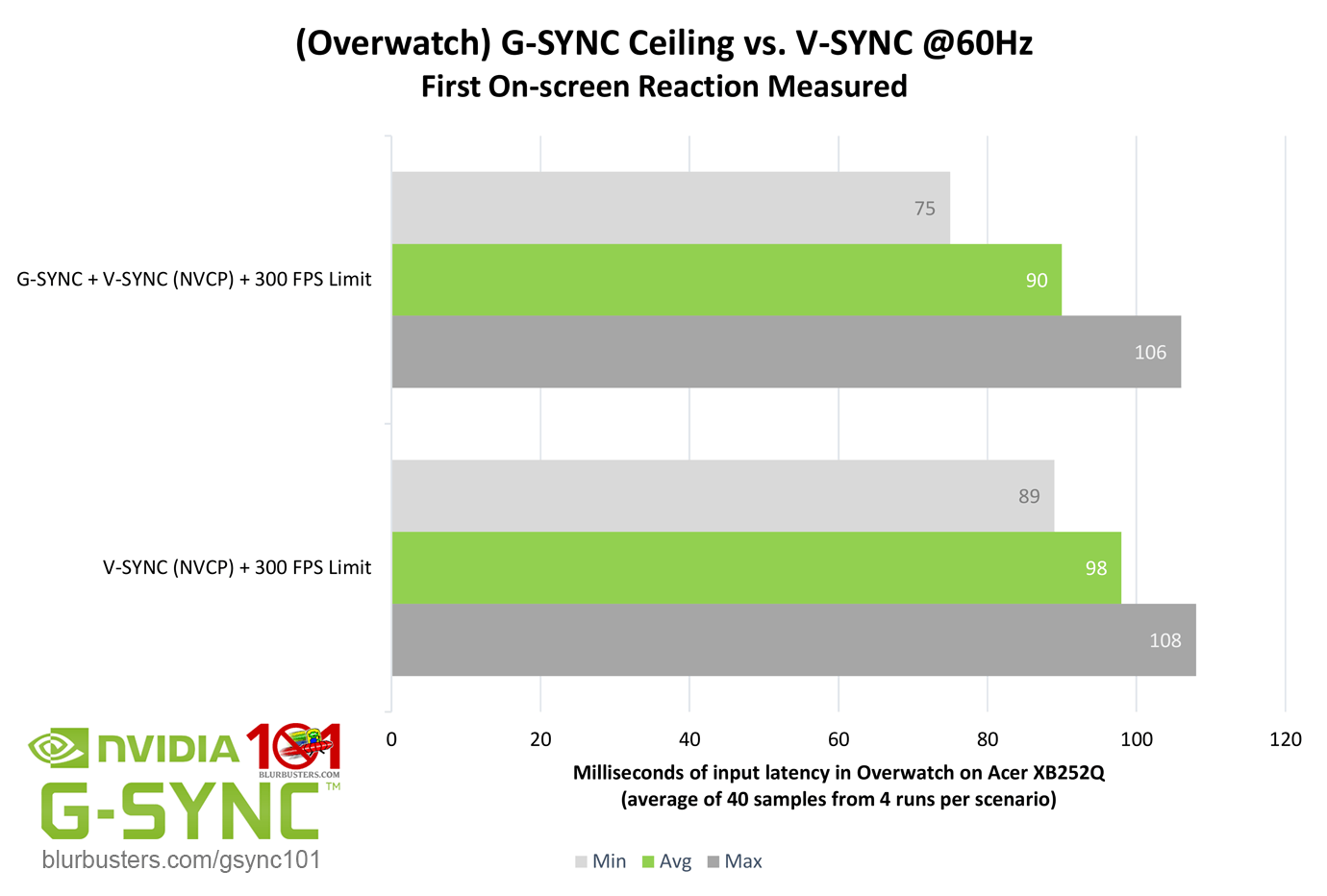

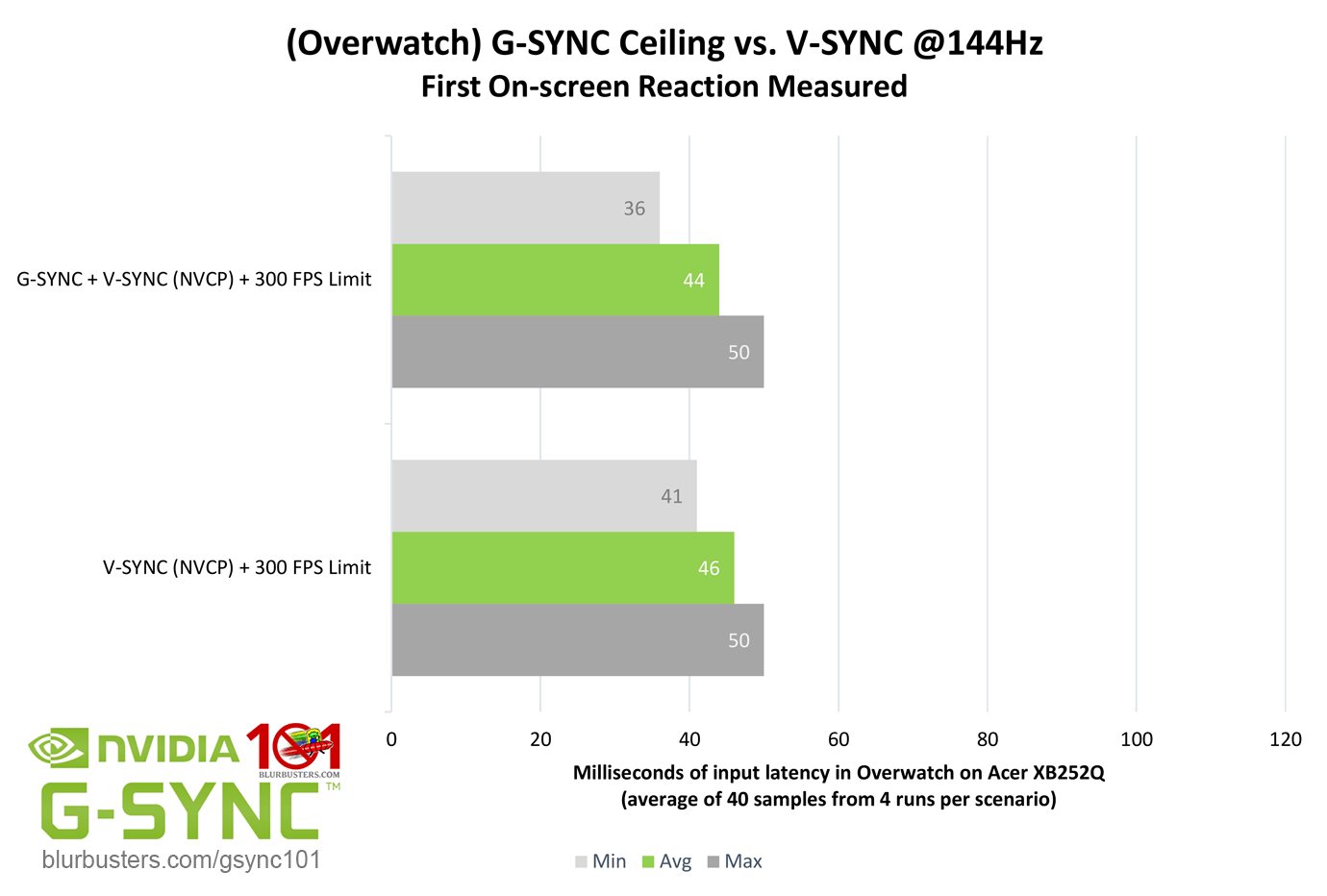

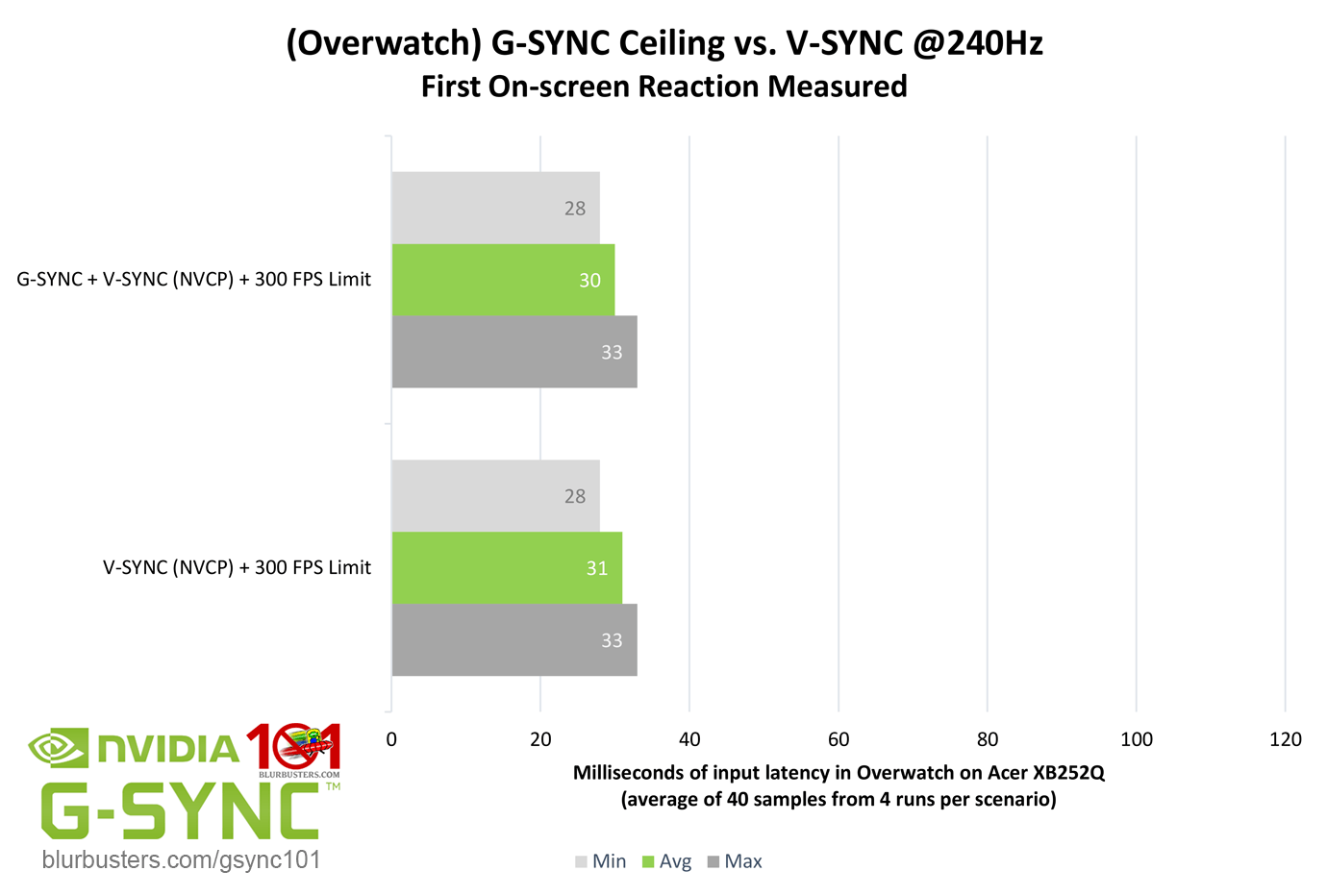

As described in G-SYNC 101: Range, G-SYNC doesn’t actually become double buffer V-SYNC above its range (nor does V-SYNC take over), but instead, G-SYNC mimics V-SYNC behavior when it can no longer adjust the refresh rate to the framerate. So, when G-SYNC hits or exceeds its ceiling, how close is it to behaving like standalone V-SYNC?

Pretty close. However, the G-SYNC numbers do show a reduction in latency, mainly in the minimums and averages across refresh rates. Why? It boils down to how G-SYNC and V-SYNC behaviors differ whenever the framerate falls (even for a moment) below the maximum refresh rate. With double buffer V-SYNC, a fixed frame delivery window is missed, a frame is repeated, and the framerate is locked to half the refresh rate, which maintains the extra latency. G-SYNC instead adjusts the refresh rate to the framerate in the same instant, eliminating that latency.
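To make the difference concrete, here is a minimal sketch of the two behaviors under a deliberately simplified model. It assumes every frame takes the same time to render, that double buffer V-SYNC can only present on fixed refresh boundaries, and that G-SYNC presents as soon as a frame is ready (but no faster than the maximum refresh rate). The function names are hypothetical, and the model ignores the bottom of the G-SYNC range entirely.

```python
import math

def vsync_double_buffer_interval_ms(render_ms: float, refresh_hz: float) -> float:
    """Time between displayed frames under the simplified double buffer V-SYNC model."""
    refresh_ms = 1000.0 / refresh_hz
    # A frame that misses its delivery window waits for the next fixed refresh,
    # so the interval rounds UP to a whole number of refresh cycles.
    # The small epsilon keeps an exact fit from rounding up by floating-point error.
    refreshes_needed = max(1, math.ceil(render_ms / refresh_ms - 1e-9))
    return refreshes_needed * refresh_ms

def gsync_interval_ms(render_ms: float, refresh_hz: float) -> float:
    """Time between displayed frames under the simplified G-SYNC model."""
    refresh_ms = 1000.0 / refresh_hz
    # The display refreshes when the frame is ready, capped at the maximum refresh rate.
    return max(render_ms, refresh_ms)

def effective_fps(interval_ms: float) -> float:
    return 1000.0 / interval_ms

if __name__ == "__main__":
    # A 60 Hz display with frames that take 17 ms, just over the 16.7 ms window.
    for name, fn in (("V-SYNC", vsync_double_buffer_interval_ms),
                     ("G-SYNC", gsync_interval_ms)):
        interval = fn(17.0, 60)
        print(f"{name}: {interval:.2f} ms per frame, {effective_fps(interval):.1f} fps")
```

In this model, a frame that misses the 60 Hz window by a fraction of a millisecond costs double buffer V-SYNC a full extra refresh cycle, while G-SYNC only pays for the time the frame actually took.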

As for “triple buffer” V-SYNC, the subject won’t be covered in depth here because G-SYNC is based on a double buffer. Still, the name actually encompasses two entirely separate methods. The first should be considered “alt” triple buffer V-SYNC, and is the method featured in the majority of modern games. Unlike double buffer V-SYNC, it prevents the lock to half the refresh rate when the framerate falls below the refresh rate, but in turn, it adds 1 frame of delay over double buffer V-SYNC when the framerate exceeds the refresh rate; if double buffer adds 2-6 frames of delay, for instance, this method would add 3-7 frames.
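For a sense of scale, the frame counts above can be converted to milliseconds. This is only an illustrative sketch: it assumes one frame of delay equals one refresh interval (reasonable when the framerate exceeds the refresh rate and V-SYNC paces output to the display), and the helper names are hypothetical. The 2-6 frame range is the example figure from the paragraph above, not a measurement.

```python
def frames_to_ms(frames: float, refresh_hz: float) -> float:
    """Convert a delay in frames to milliseconds, assuming one frame per refresh."""
    return frames * 1000.0 / refresh_hz

def alt_triple_buffer_delay(double_buffer_frames: tuple) -> tuple:
    """Add the 1 extra frame of delay that "alt" triple buffering incurs."""
    low, high = double_buffer_frames
    return (low + 1, high + 1)

if __name__ == "__main__":
    double_buffer = (2, 6)  # example range from the text
    alt = alt_triple_buffer_delay(double_buffer)
    for hz in (60, 144):
        db_ms = [frames_to_ms(f, hz) for f in double_buffer]
        alt_ms = [frames_to_ms(f, hz) for f in alt]
        print(f"{hz} Hz: double buffer {db_ms[0]:.1f}-{db_ms[1]:.1f} ms, "
              f"alt triple buffer {alt_ms[0]:.1f}-{alt_ms[1]:.1f} ms")
```

The extra frame is worth one full refresh interval, so under this assumption it costs more in absolute terms at lower refresh rates.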

“True” triple buffer V-SYNC, like “alt,” prevents the lock to half the refresh rate, but unlike “alt,” can actually reduce V-SYNC latency when the framerate exceeds the refresh rate. This “true” method is rarely used, and its availability can depend, in part, on the game engine’s API (OpenGL, DirectX, etc.).

A form of this “true” method is implemented by the DWM (Desktop Window Manager) for borderless and windowed mode, and by Fast Sync, both of which will be explained in more detail further on.

Suffice it to say, even at its worst, G-SYNC beats V-SYNC.