At the 2018 Game Developers Conference, Microsoft announced DXR, the DirectX Raytracing API for DirectX 12, which enables real-time ray tracing.

NVIDIA and Remedy Entertainment have an impressive video of real-time ray tracing on NVIDIA Volta hardware, showing photorealistic reflections and diffuse ambient lighting, a preview of upcoming 3D graphics:

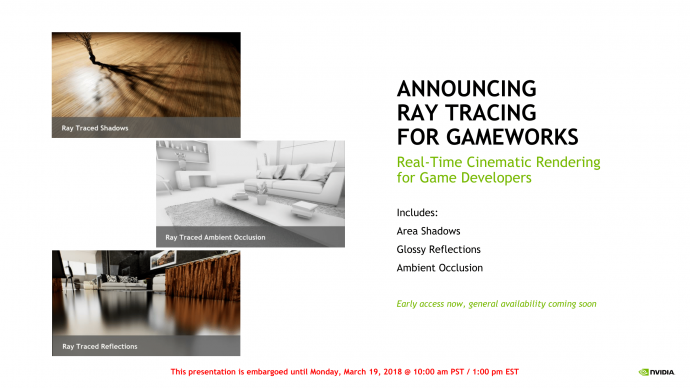

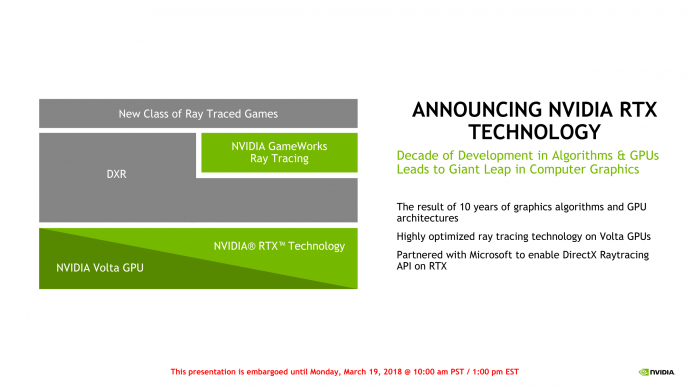

NVIDIA also announced real-time cinematic rendering with NVIDIA RTX technology, which uses Microsoft's DirectX Raytracing API.

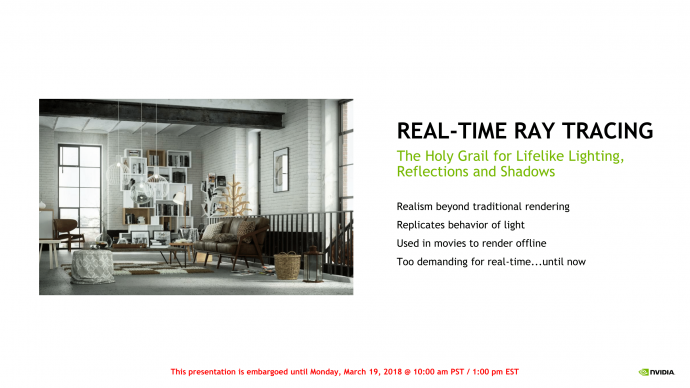

Long considered the definitive solution for realistic and lifelike lighting, reflections and shadows, ray tracing offers a level of realism far beyond what is possible using traditional rendering techniques. Real-time ray tracing replaces a majority of the techniques used today in standard rendering with realistic optical calculations that replicate the way light behaves in the real world, delivering more lifelike images.

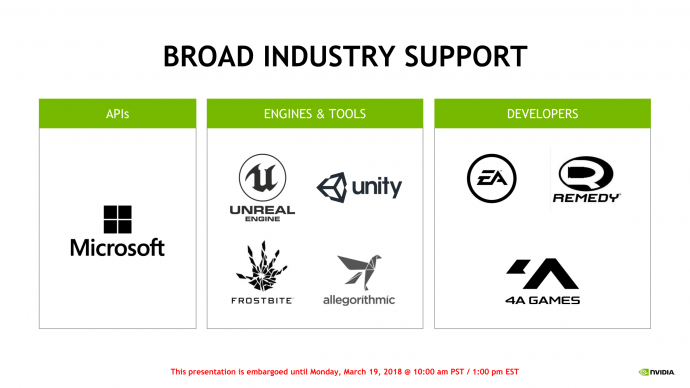

AMD also followed suit, announcing that Radeon ProRender works with Microsoft DirectX Raytracing as well, signalling broad industry support for real-time raytracing APIs.

Real-time raytracing features are coming to multiple game engines, including Frostbite, SEED, Unreal and Unity, at least initially as a way to improve lighting and shadows in traditional rasterized 3D graphics.

Electronic Arts has a video on Project PICA PICA, a real-time raytracing experiment using DirectX Raytracing. It uses SEED's Halcyon research engine, runs on NVIDIA Volta, and demonstrates many impressive lighting effects in real time.

Microsoft succinctly says the way we do 3D graphics is a hack:

> For the last thirty years, almost all games have used the same general technique—rasterization—to render images on screen. While the internal representation of the game world is maintained as three dimensions, rasterization ultimately operates in two dimensions (the plane of the screen), with 3D primitives mapped onto it through transformation matrices.
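To make "mapped onto it through transformation matrices" concrete, here is a minimal sketch of the core rasterization step: a 3D point is multiplied by a 4x4 perspective matrix, divided by its `w` component, and then scaled to pixel coordinates. It assumes an OpenGL-style projection matrix and a camera looking down the negative z axis; the function names are hypothetical and this is not code from DirectX or any engine.

```python
import math

def perspective_matrix(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective matrix (assumed convention), applied to column vectors."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def project_to_screen(point, matrix, width, height):
    """Map a 3D camera-space point onto the 2D screen plane (hypothetical helper)."""
    x, y, z = point
    cx, cy, _cz, cw = mat_vec(matrix, [x, y, z, 1.0])
    # Perspective divide: clip space -> normalized device coordinates in [-1, 1].
    ndc_x, ndc_y = cx / cw, cy / cw
    # Viewport transform: NDC -> pixels, flipping y so row 0 is the top of the screen.
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height
    return px, py
```

For example, `project_to_screen((0.0, 0.0, -5.0), perspective_matrix(90.0, 640 / 480, 0.1, 100.0), 640, 480)` lands at the screen centre, `(320.0, 240.0)`. Once every triangle vertex is flattened this way, the 3D relationships between objects are gone, which is why effects like shadows and reflections must be added back with separate techniques.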

For those not familiar with raytracing, Microsoft's article defines it very well:

> Raytracing calculates the color of pixels by tracing the path of light that would have created it and simulates this ray of light’s interactions with objects in the virtual world. Raytracing therefore calculates what a pixel would look like if a virtual world had real light. The beauty of raytracing is that it preserves the 3D world and visual effects like shadows, reflections and indirect lighting are a natural consequence of the raytracing algorithm, not special effects.
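The claim that shadows are "a natural consequence of the raytracing algorithm" can be illustrated with a toy CPU ray tracer. This minimal sketch, assuming a scene made only of spheres and a single point light, fires one ray per pixel, finds the nearest hit, and then fires a second "shadow ray" toward the light; if that ray is blocked, the point is in shadow, with no special shadow technique involved. All names here are hypothetical, and this is not how DXR is programmed (DXR runs ray generation, hit and miss shaders on the GPU).

```python
import math
from dataclasses import dataclass

def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

@dataclass
class Sphere:
    center: tuple
    radius: float

def intersect(origin, direction, sphere):
    """Distance to the nearest hit in front of origin, or None (direction must be unit length)."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0.0:
        return None
    sq = math.sqrt(disc)
    for t in (-b - sq, -b + sq):
        if t > 1e-6:
            return t
    return None

def closest_hit(origin, direction, spheres):
    """Return (distance, sphere) for the nearest sphere hit by the ray, or None."""
    best = None
    for s in spheres:
        t = intersect(origin, direction, s)
        if t is not None and (best is None or t < best[0]):
            best = (t, s)
    return best

def shade(origin, direction, spheres, light_pos):
    """Brightness in [0, 1] for one ray: 0 for background or shadow, else Lambert diffuse."""
    hit = closest_hit(origin, direction, spheres)
    if hit is None:
        return 0.0
    t, sphere = hit
    p = add(origin, scale(direction, t))
    n = normalize(sub(p, sphere.center))
    to_light = sub(light_pos, p)
    dist_light = math.sqrt(dot(to_light, to_light))
    l = scale(to_light, 1.0 / dist_light)
    # Shadow ray: nudge the origin along the normal to avoid hitting the same surface.
    blocker = closest_hit(add(p, scale(n, 1e-4)), l, spheres)
    if blocker is not None and blocker[0] < dist_light:
        return 0.0
    return min(1.0, max(0.0, dot(n, l)))

def render(width, height, spheres, light_pos):
    """Trace one primary ray per pixel from a camera at the origin looking down -z."""
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            x = (2.0 * (i + 0.5) / width - 1.0) * width / height
            y = 1.0 - 2.0 * (j + 0.5) / height
            row.append(shade((0.0, 0.0, 0.0), normalize((x, y, -1.0)), spheres, light_pos))
        image.append(row)
    return image
```

With a sphere at `(0, 0, -5)` and a light above the camera, the centre pixel is lit; adding a small sphere between the hit point and the light (but off the camera's line of sight) turns that same pixel black, purely because the shadow ray is blocked.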

NVIDIA scientist Morgan McGuire also wrote excellent guest articles for RoadToVR about real-time ray tracing and beam tracing, How NVIDIA Research is Reinventing the Display Pipeline for the Future of VR (Part 1, Part 2), which include a goal of 240 frames per second.

And lastly, a Star Wars special:

Over the long term, it will be exciting to see how graphics improve with real-time ray tracing, hopefully without frame rate degradation, for "Better Than 60 Hz" displays!