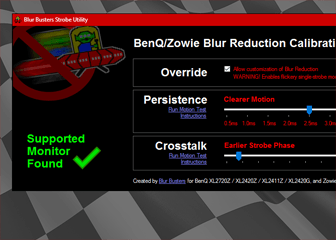

One small step closer to tomorrow’s Holodeck: NVIDIA G-SYNC! It is a technique for refreshing computer monitors at variable intervals (up to a certain limit). Instead of refreshing at fixed intervals, the monitor refreshes when the GPU finishes generating a frame! [Image: AnandTech liveblog of launch event]

Variable refresh rates combine the advantages of VSYNC ON (no tearing) with the advantages of VSYNC OFF (low input lag), while virtually eliminating stutters (no mismatch between frame rate and refresh rate).
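To make the stutter and lag difference concrete, here is a minimal timing sketch. It is not NVIDIA's implementation: the function names, the 16,667 µs period (roughly 60Hz), the 6,944 µs minimum period (roughly 144Hz) and the 22 ms render time are all assumptions chosen for illustration. It only models when each finished frame becomes visible on a fixed-refresh monitor with VSYNC ON versus a variable-refresh monitor.

```python
def present_fixed(finish_us, period_us):
    """VSYNC ON on a fixed-refresh monitor (simplified model).

    Each finished frame waits for the next refresh tick, and at most
    one new frame is shown per tick.
    """
    shown = []
    for t in finish_us:
        tick = -(-t // period_us) * period_us  # round up to the next tick
        if shown and tick <= shown[-1]:
            tick = shown[-1] + period_us       # tick already used, wait one more
        shown.append(tick)
    return shown


def present_variable(finish_us, min_period_us):
    """Variable refresh (simplified model).

    The monitor refreshes as soon as a frame is finished, but never
    sooner than min_period_us after the previous refresh (the panel's
    maximum refresh rate).
    """
    shown = []
    for t in finish_us:
        earliest = shown[-1] + min_period_us if shown else 0
        shown.append(max(t, earliest))
    return shown


def intervals(shown):
    """Time between consecutive visible frames, in microseconds."""
    return [b - a for a, b in zip(shown, shown[1:])]


# Hypothetical GPU that finishes a frame every 22 ms (about 45 fps).
finish = [22_000, 44_000, 66_000, 88_000]

fixed = present_fixed(finish, 16_667)       # ~60Hz fixed refresh
variable = present_variable(finish, 6_944)  # up to ~144Hz variable refresh

print("fixed intervals:   ", intervals(fixed))
print("variable intervals:", intervals(variable))
print("fixed added lag:   ", [s - f for s, f in zip(fixed, finish)])
print("variable added lag:", [s - f for s, f in zip(variable, finish)])
```

In this model the fixed-refresh output shows uneven gaps between frames (the stutter) plus a varying wait for the next tick (the added lag), while the variable-refresh output shows each frame the moment it is finished, at an even 22 ms cadence.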

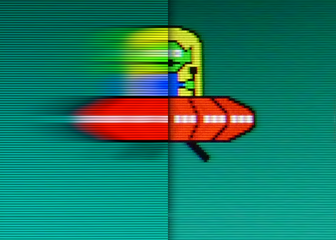

It is confirmed that this alone won’t eliminate motion blur as completely as strobe backlights (e.g. LightBoost). Still, it is a great step towards eliminating discrete refresh rates, which create motion blur even at 144Hz (see photos of 60Hz vs 120Hz vs LightBoost), especially as the 144Hz limit is raised in the future. Hopefully strobe backlight technologies can be combined with NVIDIA G-SYNC in the future, perhaps already in some upcoming models.

EDIT:

– All G-SYNC monitors include an official strobe backlight mode, better than LightBoost!

– Mark Rejhon has quickly come up with a new method of dynamically blending from a PWM-free backlight at lower framerates to strobing at higher framerates; see the addendum to Electronics Hacking: Creating a Strobe Backlight. This allows combining LightBoost + G-SYNC without creating flicker at lower framerates!